[Original] Linux Kernel Attacks: An Analysis of USMA

Posted: 2025-12-5 13:02

USMA: User Space Mapping Attack

All Linux source code referenced in this article is from version 6.12.32.

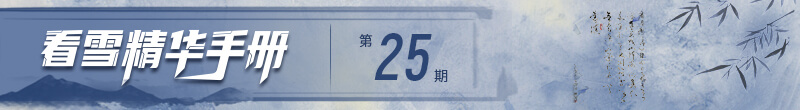

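USMA abuses the pgv array of a socket, so we first take a closer look at the data structures of the packet socket (AF_PACKET). We focus mainly on how pgv is created and mapped; for a detailed analysis of the other parts of the socket subsystem, see my other articles.

Here we focus on rx_ring/tx_ring, the key data structures for the exploitation that follows.

In other words, apart from struct socket, all of these structures are specific to packet sockets.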

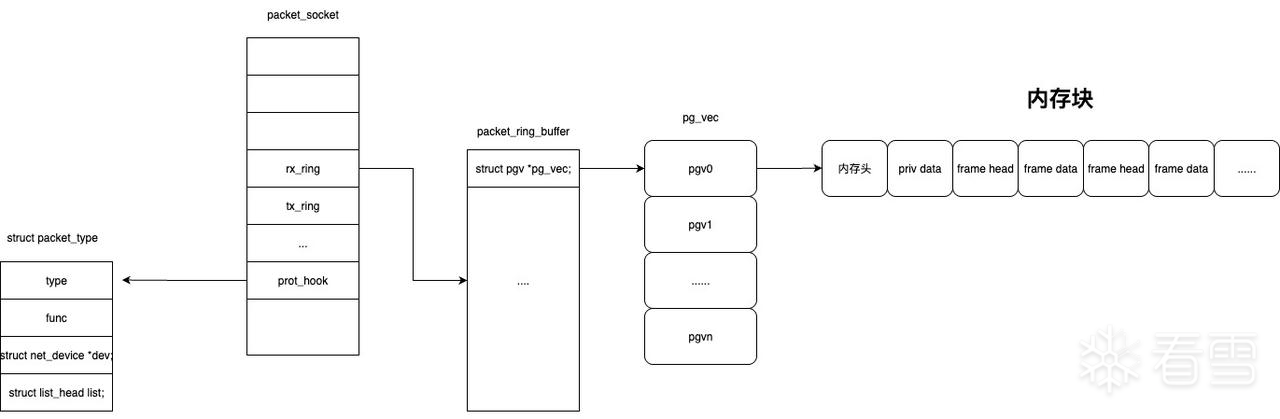

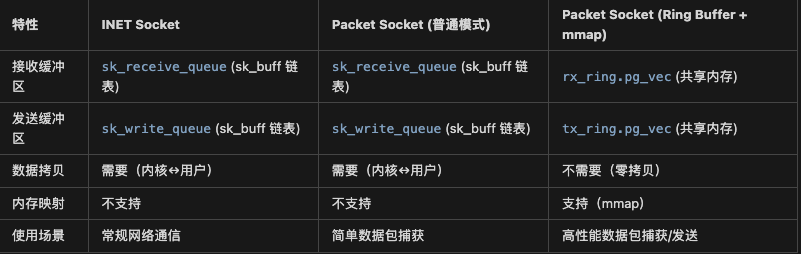

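Ordinary Linux socket I/O copies data from user space into the kernel on send and from the kernel back to user space on receive, which costs performance. Following a zero-copy-like design, Linux gives packet sockets a shared ring structure so that user space can write data directly into the ring and avoid the copy. Note that if a ring buffer is configured but never mapped with mmap, no zero copy takes place.

The ring buffer is a data structure specific to packet sockets. When neither PACKET_RX_RING nor PACKET_TX_RING is set, a packet socket uses the standard sk_receive_queue/sk_write_queue like any other socket.

User space sets PACKET_RX_RING or PACKET_TX_RING through setsockopt, which ends up calling packet_set_ring to set up the ring buffer.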
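To make the constraints on the setsockopt argument concrete, here is a minimal sketch of the sanity tests that packet_set_ring applies to a TPACKET_V1/V2 request. `struct ring_req`, `ring_req_ok` and `make_ring_req` are hypothetical names introduced for illustration; the struct only mirrors the four fields of `struct tpacket_req`, the page size is assumed to be 4 KiB, and the extra TPACKET_V3 check is left out.

```c
#include <assert.h>
#include <limits.h>
#include <stdbool.h>

/* Local mirror of the four fields of struct tpacket_req (linux/if_packet.h). */
struct ring_req {
    unsigned int tp_block_size; /* bytes per block */
    unsigned int tp_block_nr;   /* number of blocks, i.e. pg_vec entries */
    unsigned int tp_frame_size; /* bytes per frame */
    unsigned int tp_frame_nr;   /* total number of frames */
};

#define SKETCH_PAGE_SIZE 4096u /* assumption: 4 KiB pages */
#define SKETCH_ALIGNMENT 16u   /* value of TPACKET_ALIGNMENT in the uapi header */

/*
 * Return true if the request would pass the V1/V2 sanity tests.
 * min_frame_size stands for po->tp_hdrlen + po->tp_reserve.
 */
static bool ring_req_ok(const struct ring_req *r, unsigned int min_frame_size)
{
    unsigned int frames_per_block;

    if (r->tp_block_nr == 0)
        return false; /* a zero block count asks the kernel to tear the ring down */
    if (r->tp_block_size == 0 || r->tp_block_size > INT_MAX)
        return false; /* (int)tp_block_size must be positive */
    if (r->tp_block_size % SKETCH_PAGE_SIZE)
        return false; /* block size must be page aligned */
    if (r->tp_frame_size == 0 || r->tp_frame_size < min_frame_size)
        return false;
    if (r->tp_frame_size & (SKETCH_ALIGNMENT - 1))
        return false;
    frames_per_block = r->tp_block_size / r->tp_frame_size;
    if (frames_per_block == 0)
        return false;
    if (frames_per_block > UINT_MAX / r->tp_block_nr)
        return false;
    return frames_per_block * r->tp_block_nr == r->tp_frame_nr;
}

/* Build a request whose frame count is the only value the checks accept. */
static struct ring_req make_ring_req(unsigned int block_nr,
                                     unsigned int block_size,
                                     unsigned int frame_size)
{
    struct ring_req r = { block_size, block_nr, frame_size, 0 };

    if (frame_size)
        r.tp_frame_nr = (block_size / frame_size) * block_nr;
    return r;
}
```

For example, `make_ring_req(4, 4096, 2048)` yields tp_frame_nr = 8, and changing that count to any other value makes the check fail.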

pg_vec is allocated with kcalloc.

Each pgv buffer is allocated by alloc_one_pg_vec_page.

So under normal circumstances, each block corresponds to one or more contiguous pages.
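The relationship between the block size, the allocation order and the number of pages that get mapped can be sketched as follows. `block_order` imitates what the kernel's get_order() computes and `block_pages` corresponds to rb->pg_vec_pages; both helper names are hypothetical, and a 4 KiB page size is assumed.

```c
#include <assert.h>

#define SKETCH_PAGE_SIZE 4096ul /* assumption: 4 KiB pages */

/* Smallest order such that (PAGE_SIZE << order) >= size. */
static unsigned int block_order(unsigned long size)
{
    unsigned int order = 0;
    unsigned long span = SKETCH_PAGE_SIZE;

    while (span < size) {
        span <<= 1;
        order++;
    }
    return order;
}

/* Number of pages mapped per block: tp_block_size / PAGE_SIZE. */
static unsigned long block_pages(unsigned long block_size)
{
    return block_size / SKETCH_PAGE_SIZE;
}
```

Note that a 12288-byte block is allocated with order 2 (four pages), while only three pages per block are counted in pg_vec_pages.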

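packet_mmap looks up the page behind each buffer and installs it in the page table, so that a user-space access to the corresponding virtual address reaches the contents of that page.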
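From the user-space side, the whole path (socket, setsockopt, mmap) looks roughly like the sketch below. It is a plain, legitimate use of the interface under stated assumptions: `struct mapped_ring` and `map_rx_ring` are hypothetical names, the default TPACKET_V1 layout is used, only an RX ring is configured, and the function simply gives up when the caller lacks CAP_NET_RAW.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <linux/if_ether.h>
#include <linux/if_packet.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/socket.h>
#include <unistd.h>

struct mapped_ring {
    int fd;     /* packet socket, or -1 on failure */
    void *addr; /* start of the mapping, or NULL on failure */
    size_t len; /* length of the mapping in bytes */
};

/* Create a packet socket, configure an RX ring and map it into user space. */
static struct mapped_ring map_rx_ring(unsigned int block_nr,
                                      unsigned int block_size,
                                      unsigned int frame_size)
{
    struct mapped_ring m = { -1, NULL, 0 };
    struct tpacket_req req;
    size_t len;
    void *addr;
    int fd;

    if (frame_size == 0)
        return m;

    fd = socket(AF_PACKET, SOCK_RAW, htons(ETH_P_ALL));
    if (fd < 0)
        return m; /* typically EPERM without CAP_NET_RAW */

    memset(&req, 0, sizeof(req));
    req.tp_block_size = block_size;
    req.tp_block_nr = block_nr;
    req.tp_frame_size = frame_size;
    req.tp_frame_nr = (block_size / frame_size) * block_nr;
    if (setsockopt(fd, SOL_PACKET, PACKET_RX_RING, &req, sizeof(req)) < 0) {
        close(fd);
        return m;
    }

    /* packet_mmap() requires the length to equal the total ring size exactly. */
    len = (size_t)block_nr * block_size;
    addr = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (addr == MAP_FAILED) {
        close(fd);
        return m;
    }

    m.fd = fd;
    m.addr = addr;
    m.len = len;
    return m;
}
```

A caller would release the ring with `munmap(m.addr, m.len)` followed by `close(m.fd)`.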

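The attack targets the mapping performed by packet_mmap. Studying packet_mmap closely, we find that if we can modify pg_vec, we can map arbitrary kernel pages into user space. The most common way to use this is to map pages of the kernel text segment, which allows arbitrary code modification.

As shown above, pg_vec is obtained from kcalloc(block_nr, sizeof(struct pgv), GFP_KERNEL | __GFP_NOWARN).

sizeof(struct pgv) is 8 bytes and we control block_nr, so we can make the pg_vec array an object of almost any size (any multiple of 8 bytes), which is very convenient for heap spraying.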
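The size arithmetic can be sketched as below. The table of general-purpose kmalloc cache sizes is an assumption that matches a common x86-64 configuration; it may differ on other architectures or kernel configurations. `pg_vec_bytes` and `kmalloc_bucket` are hypothetical helper names.

```c
#include <assert.h>
#include <stddef.h>

#define SKETCH_PGV_SIZE 8u /* sizeof(struct pgv) on a 64-bit kernel */

/* Bytes requested by kcalloc(block_nr, sizeof(struct pgv), ...). */
static size_t pg_vec_bytes(size_t block_nr)
{
    return block_nr * SKETCH_PGV_SIZE;
}

/* Assumed general-purpose kmalloc cache sizes, smallest first. */
static const size_t kmalloc_sizes[] = {
    8, 16, 32, 64, 96, 128, 192, 256, 512, 1024, 2048, 4096, 8192
};

/*
 * Return the object size of the cache that would serve the request,
 * or 0 when the request is larger than the biggest cache in the table.
 */
static size_t kmalloc_bucket(size_t bytes)
{
    size_t i;

    for (i = 0; i < sizeof(kmalloc_sizes) / sizeof(kmalloc_sizes[0]); i++) {
        if (bytes <= kmalloc_sizes[i])
            return kmalloc_sizes[i];
    }
    return 0;
}
```

Under these assumptions, 64 blocks give a 512-byte array served from the 512-byte cache, and 17 blocks give 136 bytes, which rounds up to the 192-byte cache.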

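Of course, during exploitation we have to look carefully at whether any checks are applied to the page.

According to the definition of enum pagetype, the following types of pages are detected by page_has_type()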

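Creating a packet socket requires root privileges (more precisely, CAP_NET_RAW), so an ordinary user cannot use it directly; a user namespace is needed to become a pseudo-root that can create the packet socket. Some environments, however, do not support user namespaces. (User namespaces depend on CONFIG_USER_NS, which is off by default in the kernel configuration.)

The capability that is checked is CAP_NET_RAW in the user namespace.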
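One common way to obtain CAP_NET_RAW inside a user namespace is sketched below, assuming the kernel was built with CONFIG_USER_NS and that unprivileged user namespaces are not disabled by the system policy. `write_file` and `enter_user_netns` are hypothetical helper names; the function returns -1 when the environment does not allow it.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <sched.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Write a short string to a file; return 0 on success, -1 otherwise. */
static int write_file(const char *path, const char *text)
{
    size_t len = strlen(text);
    ssize_t n;
    int fd = open(path, O_WRONLY);

    if (fd < 0)
        return -1;
    n = write(fd, text, len);
    close(fd);
    return n == (ssize_t)len ? 0 : -1;
}

/*
 * Move the calling process into a new user namespace and a new network
 * namespace, then map the current uid/gid to 0 inside the namespace.
 * The new network namespace is owned by the new user namespace, so the
 * CAP_NET_RAW check for packet sockets is made against it.
 */
static int enter_user_netns(void)
{
    char map[64];
    uid_t uid = getuid();
    gid_t gid = getgid();

    if (unshare(CLONE_NEWUSER | CLONE_NEWNET) < 0)
        return -1;

    /* setgroups must be denied before an unprivileged gid_map write. */
    if (write_file("/proc/self/setgroups", "deny") < 0)
        return -1;

    snprintf(map, sizeof(map), "0 %u 1", (unsigned int)uid);
    if (write_file("/proc/self/uid_map", map) < 0)
        return -1;

    snprintf(map, sizeof(map), "0 %u 1", (unsigned int)gid);
    if (write_file("/proc/self/gid_map", map) < 0)
        return -1;

    return 0;
}
```

After a successful call, `socket(AF_PACKET, SOCK_RAW, ...)` is expected to succeed for the calling process even though it started without root privileges.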

```c
struct packet_sock {
    /* struct sock has to be the first member of packet_sock */
    struct sock                 sk;
    struct packet_fanout        *fanout;
    union tpacket_stats_u       stats;
    struct packet_ring_buffer   rx_ring;
    struct packet_ring_buffer   tx_ring;
    int                         copy_thresh;
    spinlock_t                  bind_lock;
    struct mutex                pg_vec_lock;
    unsigned long               flags;
    int                         ifindex;    /* bound device */
    u8                          vnet_hdr_sz;
    __be16                      num;
    struct packet_rollover      *rollover;
    struct packet_mclist        *mclist;
    atomic_long_t               mapped;
    enum tpacket_versions       tp_version;
    unsigned int                tp_hdrlen;
    unsigned int                tp_reserve;
    unsigned int                tp_tstamp;
    struct completion           skb_completion;
    struct net_device __rcu     *cached_dev;
    struct packet_type          prot_hook ____cacheline_aligned_in_smp;
    atomic_t                    tp_drops ____cacheline_aligned_in_smp;
};
```

```c
struct packet_ring_buffer {
    struct pgv              *pg_vec;
    unsigned int            head;
    unsigned int            frames_per_block;
    unsigned int            frame_size;
    unsigned int            frame_max;
    unsigned int            pg_vec_order;
    unsigned int            pg_vec_pages;
    unsigned int            pg_vec_len;
    unsigned int __percpu   *pending_refcnt;
    union {
        unsigned long               *rx_owner_map;
        struct tpacket_kbdq_core    prb_bdqc;
    };
};
```

```c
struct pgv {
    char *buffer;
};
```

```text
User space: socket(AF_PACKET, SOCK_RAW, htons(ETH_P_ALL))
    ↓
sys_socket()
    ↓
__sock_create()
    ↓
pf->create()  [packet_family_ops.create = packet_create]
    ↓
packet_create()
    ├─ sk_alloc()                          // allocate sock and packet_sock
    ├─ packet_alloc_pending()              // allocate the pending structure
    ├─ sock_init_data()                    // initialize the sock data
    ├─ mutex_init(&po->pg_vec_lock)        // initialize the pg_vec lock
    ├─ po->prot_hook.func = packet_rcv     // set the receive function
    └─ __register_prot_hook()              // register the protocol hook
        └─ dev_add_pack(&po->prot_hook)    // add it to the protocol stack
```

```text
User space: setsockopt(fd, SOL_PACKET, PACKET_RX_RING, &req, sizeof(req))
    ↓
sys_setsockopt()
    ↓
do_sock_setsockopt()
    ↓
packet_setsockopt()
    ↓
case PACKET_RX_RING:
packet_set_ring()
    ├─ parameter validation
    ├─ order = get_order(req->tp_block_size)          // compute the page order
    ├─ alloc_pg_vec(req, order)                       // allocate the pg_vec array
    │   ├─ kcalloc(block_nr, sizeof(struct pgv))      // allocate the pg_vec array
    │   └─ for each block:
    │       └─ alloc_one_pg_vec_page(order)           // allocate the memory of each block
    │           ├─ __get_free_pages()                 // prefer physically contiguous pages
    │           ├─ vzalloc()                          // on failure, use virtually contiguous memory
    │           └─ __get_free_pages()                 // final retry (swap allowed)
    ├─ init_prb_bdqc() [V3 only]                      // initialize the V3 block descriptors
    │   ├─ p1->pkbdq = pg_vec                         // set the pg_vec pointer
    │   └─ prb_open_block()                           // open the first block
    ├─ temporarily unregister the protocol hook
    ├─ swap(rb->pg_vec, pg_vec)                       // swap in the new pg_vec
    ├─ set the ring buffer parameters
    └─ re-register the protocol hook
        └─ po->prot_hook.func = tpacket_rcv           // switch to tpacket_rcv
```

```c
static int packet_set_ring(struct sock *sk, union tpacket_req_u *req_u,
                           int closing, int tx_ring)
{
    struct pgv *pg_vec = NULL;
    struct packet_sock *po = pkt_sk(sk);
    unsigned long *rx_owner_map = NULL;
    int was_running, order = 0;
    struct packet_ring_buffer *rb;
    struct sk_buff_head *rb_queue;
    __be16 num;
    int err;
    /* Added to avoid minimal code churn */
    struct tpacket_req *req = &req_u->req;

    rb = tx_ring ? &po->tx_ring : &po->rx_ring;
    rb_queue = tx_ring ? &sk->sk_write_queue : &sk->sk_receive_queue;

    err = -EBUSY;
    if (!closing) {
        if (atomic_long_read(&po->mapped))
            goto out;
        if (packet_read_pending(rb))
            goto out;
    }

    if (req->tp_block_nr) {
        unsigned int min_frame_size;

        /* Sanity tests and some calculations */
        err = -EBUSY;
        if (unlikely(rb->pg_vec))
            goto out;

        switch (po->tp_version) {
        case TPACKET_V1:
            po->tp_hdrlen = TPACKET_HDRLEN;
            break;
        case TPACKET_V2:
            po->tp_hdrlen = TPACKET2_HDRLEN;
            break;
        case TPACKET_V3:
            po->tp_hdrlen = TPACKET3_HDRLEN;
            break;
        }

        err = -EINVAL;
        if (unlikely((int)req->tp_block_size <= 0))
            goto out;
        if (unlikely(!PAGE_ALIGNED(req->tp_block_size)))
            goto out;
        min_frame_size = po->tp_hdrlen + po->tp_reserve;
        if (po->tp_version >= TPACKET_V3 &&
            req->tp_block_size <
            BLK_PLUS_PRIV((u64)req_u->req3.tp_sizeof_priv) + min_frame_size)
            goto out;
        if (unlikely(req->tp_frame_size < min_frame_size))
            goto out;
        if (unlikely(req->tp_frame_size & (TPACKET_ALIGNMENT - 1)))
            goto out;

        rb->frames_per_block = req->tp_block_size / req->tp_frame_size;
        if (unlikely(rb->frames_per_block == 0))
            goto out;
        if (unlikely(rb->frames_per_block > UINT_MAX / req->tp_block_nr))
            goto out;
        if (unlikely((rb->frames_per_block * req->tp_block_nr) !=
                     req->tp_frame_nr))
            goto out;

        err = -ENOMEM;
        order = get_order(req->tp_block_size);
        pg_vec = alloc_pg_vec(req, order);
        if (unlikely(!pg_vec))
            goto out;
        switch (po->tp_version) {
        case TPACKET_V3:
            /* Block transmit is not supported yet */
            if (!tx_ring) {
                init_prb_bdqc(po, rb, pg_vec, req_u);
            } else {
                struct tpacket_req3 *req3 = &req_u->req3;

                if (req3->tp_retire_blk_tov ||
                    req3->tp_sizeof_priv ||
                    req3->tp_feature_req_word) {
                    err = -EINVAL;
                    goto out_free_pg_vec;
                }
            }
            break;
        default:
            if (!tx_ring) {
                rx_owner_map = bitmap_alloc(req->tp_frame_nr,
                        GFP_KERNEL | __GFP_NOWARN | __GFP_ZERO);
                if (!rx_owner_map)
                    goto out_free_pg_vec;
            }
            break;
        }
    }
    /* Done */
    else {
        err = -EINVAL;
        if (unlikely(req->tp_frame_nr))
            goto out;
    }

    /* Detach socket from network */
    spin_lock(&po->bind_lock);
    was_running = packet_sock_flag(po, PACKET_SOCK_RUNNING);
    num = po->num;
    if (was_running) {
        WRITE_ONCE(po->num, 0);
        __unregister_prot_hook(sk, false);
    }
    spin_unlock(&po->bind_lock);

    synchronize_net();

    err = -EBUSY;
    mutex_lock(&po->pg_vec_lock);
    if (closing || atomic_long_read(&po->mapped) == 0) {
        err = 0;
        spin_lock_bh(&rb_queue->lock);
        swap(rb->pg_vec, pg_vec);
        if (po->tp_version <= TPACKET_V2)
            swap(rb->rx_owner_map, rx_owner_map);
        rb->frame_max = (req->tp_frame_nr - 1);
        rb->head = 0;
        rb->frame_size = req->tp_frame_size;
        spin_unlock_bh(&rb_queue->lock);

        swap(rb->pg_vec_order, order);
        swap(rb->pg_vec_len, req->tp_block_nr);

        rb->pg_vec_pages = req->tp_block_size/PAGE_SIZE;
        po->prot_hook.func = (po->rx_ring.pg_vec) ?
                        tpacket_rcv : packet_rcv;
        skb_queue_purge(rb_queue);
        if (atomic_long_read(&po->mapped))
            pr_err("packet_mmap: vma is busy: %ld\n",
                   atomic_long_read(&po->mapped));
    }
    mutex_unlock(&po->pg_vec_lock);

    spin_lock(&po->bind_lock);
    if (was_running) {
        WRITE_ONCE(po->num, num);
        register_prot_hook(sk);
    }
    spin_unlock(&po->bind_lock);
    if (pg_vec && (po->tp_version > TPACKET_V2)) {
        /* Because we don't support block-based V3 on tx-ring */
        if (!tx_ring)
            prb_shutdown_retire_blk_timer(po, rb_queue);
    }

out_free_pg_vec:
    if (pg_vec) {
        bitmap_free(rx_owner_map);
        free_pg_vec(pg_vec, order, req->tp_block_nr);
    }
out:
    return err;
}
```

```c
static struct pgv *alloc_pg_vec(struct tpacket_req *req, int order)
{
    unsigned int block_nr = req->tp_block_nr;   // number of blocks
    struct pgv *pg_vec;                         // pointer to the pgv array
    int i;

    // Allocate the pg_vec array: block_nr entries of struct pgv
    pg_vec = kcalloc(block_nr, sizeof(struct pgv), GFP_KERNEL | __GFP_NOWARN);
    if (unlikely(!pg_vec))
        goto out;

    // Allocate the pages backing each block
    for (i = 0; i < block_nr; i++) {
        // Allocate the memory of one block (2^order pages)
        pg_vec[i].buffer = alloc_one_pg_vec_page(order);
        if (unlikely(!pg_vec[i].buffer))
            goto out_free_pgvec;    // on failure, free what was allocated
    }

out:
    return pg_vec;

out_free_pgvec:
    // Free pg_vec, including every buffer allocated so far
    free_pg_vec(pg_vec, order, block_nr);
    pg_vec = NULL;
    goto out;
}
```

```text
User space: mmap(fd, ...)
    ↓
sys_mmap()
    ↓
packet_mmap()
    ├─ mutex_lock(&po->pg_vec_lock)
    ├─ compute expected_size = pg_vec_len * pg_vec_pages * PAGE_SIZE
    ├─ for each ring buffer:
    │   └─ for each pg_vec[i]:
    │       └─ for each page in block:
    │           └─ vm_insert_page()        // map the physical page into user space
    ├─ atomic_long_inc(&po->mapped)        // increment the mapping count
    └─ mutex_unlock(&po->pg_vec_lock)
```

```text
User space accesses address start
    |
    v
CPU issues virtual address start
    |
    v
MMU walks the page table
    |
    |  the page table entry (PTE) was already installed by set_pte_at()
    |  the PTE holds the physical address of the page
    |
    v
MMU translates the virtual address into a physical address
    |
    v
Physical memory is accessed (the page backing buffer)
```

```c
/**
 * packet_mmap - map the ring buffers of a packet socket into user space
 *
 * @file: file structure of the socket
 * @sock: socket structure
 * @vma:  virtual memory area describing the user-space range to map
 *
 * What it does:
 * 1. Maps the physical pages of rx_ring and tx_ring in packet_sock into user space
 * 2. Provides zero copy: user space accesses the kernel ring buffer directly
 * 3. After mapping, user space sends and receives packets by reading and
 *    writing the ring buffer directly
 *
 * Return value:
 *  0       - success
 *  -EINVAL - invalid argument (vm_pgoff is not 0, size mismatch, or no ring buffer)
 *  other   - error code returned by vm_insert_page
 */
static int packet_mmap(struct file *file, struct socket *sock,
                       struct vm_area_struct *vma)
{
    struct sock *sk = sock->sk;             // underlying sock structure
    struct packet_sock *po = pkt_sk(sk);    // converted to packet_sock
    unsigned long size, expected_size;      // size: mapping size requested by the user
                                            // expected_size: mapping size actually required
    struct packet_ring_buffer *rb;          // ring buffer pointer used for iteration
    unsigned long start;                    // user virtual address currently being mapped
    int err = -EINVAL;                      // error code, invalid argument by default
    int i;

    /*
     * Check vm_pgoff (the page offset).
     * It must be 0, meaning the mapping starts at the beginning of the
     * file/device. A non-zero value would ask for a mapping at some offset,
     * which packet sockets do not support.
     */
    if (vma->vm_pgoff)
        return -EINVAL;

    /*
     * Take the pg_vec_lock mutex.
     * It protects pg_vec against concurrent access, so the ring buffer
     * cannot be modified or freed while it is being mapped.
     */
    mutex_lock(&po->pg_vec_lock);

    /*
     * Step 1: compute the expected mapping size.
     * Walk rx_ring and tx_ring and add up the total size of all ring buffers.
     *
     *   expected_size = Σ (pg_vec_len * pg_vec_pages * PAGE_SIZE)
     *
     * where:
     *   - pg_vec_len:   number of blocks (length of the pg_vec array)
     *   - pg_vec_pages: number of pages per block
     *   - PAGE_SIZE:    page size (usually 4 KB)
     *
     * Example: with 4 blocks of 2 pages (8 KB) each,
     *   expected_size = 4 * 2 * 4096 = 32 KB
     */
    expected_size = 0;
    for (rb = &po->rx_ring; rb <= &po->tx_ring; rb++) {    // walk rx_ring and tx_ring
        if (rb->pg_vec) {                                  // this ring buffer is allocated
            expected_size += rb->pg_vec_len                // number of blocks
                        * rb->pg_vec_pages                 // pages per block
                        * PAGE_SIZE;                       // page size
        }
    }

    /*
     * Without any ring buffer there is nothing to map.
     * The user must first set one up with
     * setsockopt(PACKET_RX_RING/PACKET_TX_RING).
     */
    if (expected_size == 0)
        goto out;

    /*
     * Step 2: validate the mapping size requested by the user.
     * vma->vm_end - vma->vm_start is the size passed to mmap in user space.
     * It must equal expected_size exactly, no more and no less.
     *
     * Why an exact match?
     *   - A smaller request would leave part of the ring buffer unmapped
     *   - A larger request would lead to out-of-bounds access
     *   - An exact match keeps user space and the kernel in agreement
     *     about the mapped region
     */
    size = vma->vm_end - vma->vm_start;     // mapping size requested by the user
    if (size != expected_size)
        goto out;

    /*
     * Step 3: perform the actual page mapping.
     * Every page of every block of every ring buffer is mapped into user space.
     *
     * Mapping order:
     *   1. all pages of rx_ring first
     *   2. then all pages of tx_ring
     *   3. within a ring buffer, in pg_vec array order
     *   4. within a block, in page order
     */
    start = vma->vm_start;                  // start at the address requested by user space
    for (rb = &po->rx_ring; rb <= &po->tx_ring; rb++) {    // walk rx_ring and tx_ring
        if (rb->pg_vec == NULL)             // skip ring buffers that are not allocated
            continue;

        /*
         * Walk every block of this ring buffer (every element of pg_vec).
         * pg_vec_len is the number of blocks.
         */
        for (i = 0; i < rb->pg_vec_len; i++) {
            struct page *page;                      // page structure to map
            void *kaddr = rb->pg_vec[i].buffer;     // kernel virtual address of this block
            int pg_num;                             // page index within the block

            /*
             * Walk every page of the block.
             * pg_vec_pages is the number of pages in the block,
             * e.g. block_size = 8192 (2 pages) gives pg_vec_pages = 2.
             */
            for (pg_num = 0; pg_num < rb->pg_vec_pages; pg_num++) {
                /*
                 * pgv_to_page: convert a kernel virtual address into a
                 * struct page pointer. It handles two cases:
                 *   1. buffer came from __get_free_pages (physically
                 *      contiguous pages): uses virt_to_page
                 *   2. buffer came from vmalloc (virtually contiguous,
                 *      possibly not physically contiguous): uses
                 *      vmalloc_to_page
                 */
                page = pgv_to_page(kaddr);

                /*
                 * vm_insert_page: insert a physical page into the user
                 * virtual address space.
                 *
                 * @vma:   virtual memory area
                 * @start: user virtual address (page aligned)
                 * @page:  physical page to map
                 *
                 * It installs a page table entry that maps the user
                 * address start to the physical page, so an access to
                 * start goes straight to the memory behind page. Kernel
                 * and user space thus share the same physical memory
                 * (zero copy).
                 *
                 * Returns 0 on success, a negative value on failure
                 * (out of memory, address conflict, and so on).
                 */
                err = vm_insert_page(vma, start, page);
                if (unlikely(err))
                    goto out;           // mapping failed, go to error handling

                /*
                 * Advance both addresses:
                 *   - start: next user virtual address to map
                 *   - kaddr: virtual address of the next kernel page
                 */
                start += PAGE_SIZE;     // user address moves forward one page
                kaddr += PAGE_SIZE;     // kernel address moves forward one page
            }
        }
    }

    /*
     * Step 4: bookkeeping after a successful mapping.
     *
     * 1. Increment the mapping count.
     *    The mapped counter tracks how many VMAs map this socket. It is
     *    checked when the socket is closed or the ring buffer is changed:
     *    if mapped > 0, user space is still using the ring buffer and it
     *    must not be freed.
     */
    atomic_long_inc(&po->mapped);

    /*
     * 2. Install the VMA operation callbacks.
     *    packet_mmap_ops provides two callbacks:
     *      - .open:  called when the VMA is duplicated by fork (increments mapped)
     *      - .close: called when the VMA is closed (decrements mapped)
     *    This keeps the mapped count correct across fork and process exit.
     */
    vma->vm_ops = &packet_mmap_ops;
    err = 0;                            // success

out:
    /*
     * Release the mutex and return the error code.
     * The lock is released on both the success and the failure path.
     */
    mutex_unlock(&po->pg_vec_lock);
    return err;
}
```