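Today I was playing Honor of Kings (王者农药) when my crush suddenly video-called me. But I was in the middle of a match, and a national-server-ranked Hua Mulan like me can't just go AFK!!!

I won that match in the end, but I lost my crush. Feeling pretty down, I came up with an idea: if I picked up every time she video-called, chatted with her and sang to her, winning her over would be easy. Yes, exactly.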

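First, let's look at the flow of an Android live video stream (image found via Baidu).

As the diagram shows, the data originates at the camera, and everything downstream can be ignored. In other words, replace the camera's data and the job is done!!

So the first thing to figure out is how to replace the camera's data.

To make that work, step one is writing code that captures the camera's data.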

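SurfaceView: this View can take image data directly from memory or from hardware interfaces such as DMA. That makes it a very important drawing container, so camera apps are generally built on it.

Methods to override:

SurfaceHolder: think of it as the controller for a Surface. It handles what is drawn on the Surface's Canvas, including effects and animation, and controls the surface itself: its size, pixel format, and so on.

SurfaceTexture: this can be seen as a combination of a Surface and a texture. It delivers the image producer's data to a texture, and the application then processes that data itself.

The relevant code snippet:

At this point the camera's data can be displayed in real time.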

# Camera.java source analysis

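After modifying the source to add log output, I found that every photo or recording goes through three events:

CAMERA_MSG_RAW_IMAGE

CAMERA_MSG_COMPRESSED_IMAGE

CAMERA_MSG_POSTVIEW_FRAME

In each of them, (byte[])msg.obj is the image data.

There are also two core native functions here.

Summary: modifying the functions analyzed above directly should be enough.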
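As a rough illustration of that substitution (a sketch under stated assumptions, not the actual patch to Camera.java): before the preview branch of the handler passes (byte[])msg.obj to onPreviewFrame, the buffer could be swapped for a frame decoded from a video. The class FrameSubstitution and its methods nv21Size and chooseFrame are hypothetical names made up for this example. It assumes the preview uses the default NV21 format, and it falls back to the real camera frame whenever the replacement has the wrong size.

```java
import java.util.Arrays;

/** Hypothetical helper for illustration; not part of the Android framework. */
final class FrameSubstitution {
    private FrameSubstitution() {}

    /** Bytes in one NV21 frame: a full-size Y plane plus interleaved V/U samples at quarter resolution. */
    static int nv21Size(int width, int height) {
        int chromaWidth = (width + 1) / 2;
        int chromaHeight = (height + 1) / 2;
        return width * height + 2 * chromaWidth * chromaHeight;
    }

    /**
     * Picks the buffer to hand to the app's preview callback.
     * Returns a copy of the replacement when it has the expected NV21 length,
     * otherwise returns the original camera frame unchanged.
     */
    static byte[] chooseFrame(byte[] cameraFrame, byte[] replacement, int width, int height) {
        if (replacement != null && replacement.length == nv21Size(width, height)) {
            return Arrays.copyOf(replacement, replacement.length);
        }
        return cameraFrame;
    }
}
```

In a patched handler the call would presumably look like pCb.onPreviewFrame(FrameSubstitution.chooseFrame((byte[]) msg.obj, fakeFrame, 640, 480), mCamera), where fakeFrame is a hypothetical buffer filled by the video player.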

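1: Use ffmpeg to play the video and push the data

2: Use Android's native support

Playback goes through a SurfaceView: the SurfaceView displays the video frames, while MediaPlayer sets the playback parameters and controls playback.

I then found a demo via Baidu that displays video through a SurfaceView.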

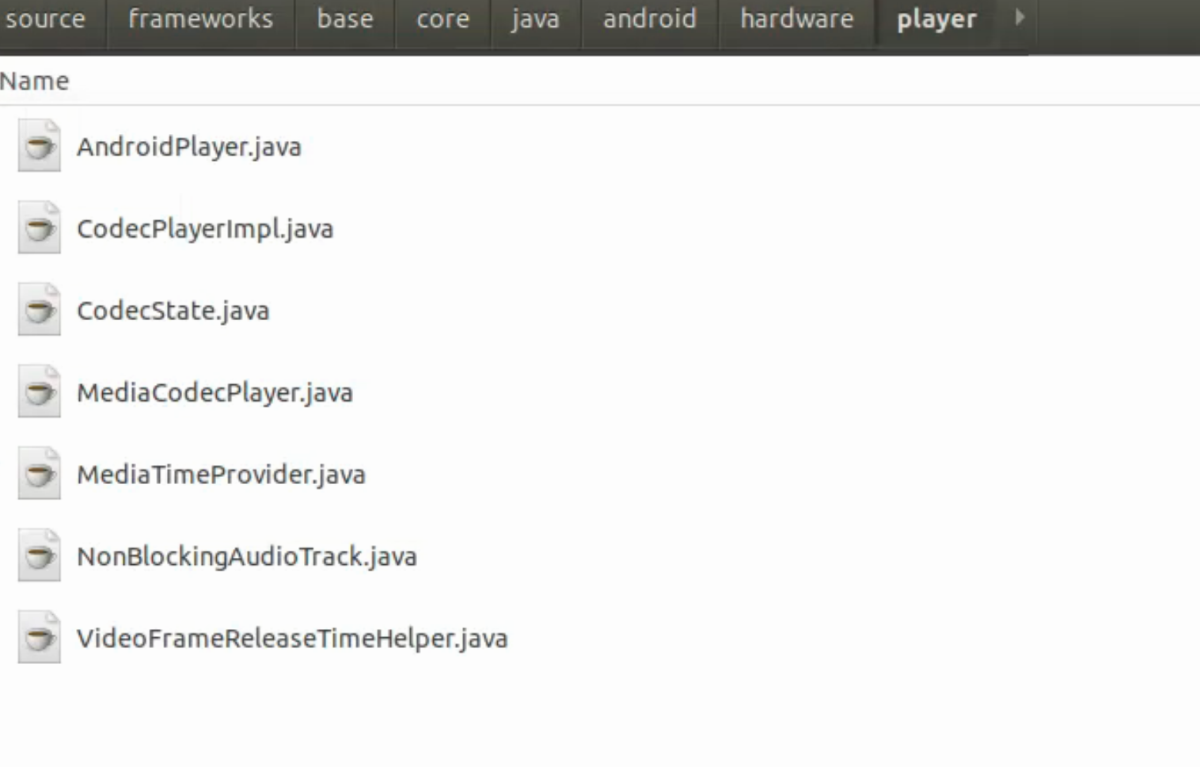

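Here I used both the built-in MediaPlayer and a custom Player of my own, and the results are about the same. I then combined these classes into VirCamera.

Convert the YUV data and store it as yuv420p.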
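The conversion is mostly byte shuffling. Here is a minimal sketch, assuming the source frames are NV21 (the default preview format) with even dimensions. Nv21ToI420 is a hypothetical name for this example, not a class from the project. NV21 stores the Y plane followed by interleaved V/U pairs, while yuv420p (I420) stores Y, then all U samples, then all V samples.

```java
/** Hypothetical converter for illustration: NV21 (Y + interleaved VU) to I420/yuv420p (Y + U + V). */
final class Nv21ToI420 {
    private Nv21ToI420() {}

    static byte[] convert(byte[] nv21, int width, int height) {
        if (width % 2 != 0 || height % 2 != 0) {
            throw new IllegalArgumentException("width and height must be even");
        }
        int ySize = width * height;
        int chromaSize = ySize / 4; // samples per chroma plane
        if (nv21.length < ySize + 2 * chromaSize) {
            throw new IllegalArgumentException("buffer too small for " + width + "x" + height);
        }
        byte[] i420 = new byte[ySize + 2 * chromaSize];
        // The luma plane is identical in both layouts.
        System.arraycopy(nv21, 0, i420, 0, ySize);
        // De-interleave chroma: NV21 stores V first, then U, for every 2x2 pixel block.
        for (int i = 0; i < chromaSize; i++) {
            i420[ySize + i] = nv21[ySize + 2 * i + 1];          // U plane
            i420[ySize + chromaSize + i] = nv21[ySize + 2 * i]; // V plane
        }
        return i420;
    }
}
```

For a 640x480 frame the output is 460800 bytes: 307200 of Y followed by 76800 each of U and V.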

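Now it's time to witness the magic.

Finally, build it and start testing.

My crush never did video-call me, so I tried it on a live-streaming platform instead.

Heh, looks like it worked. Now I just wait for her call...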

    surfaceChanged(SurfaceHolder holder, int format, int width, int height) {}
    surfaceCreated(SurfaceHolder holder) {}
    surfaceDestroyed(SurfaceHolder holder) {}

    SurfaceHolder holder = mSurfaceView.getHolder();
    // setType() is deprecated and ignored on newer Android versions; kept for old devices.
    holder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);
    holder.addCallback(new SurfaceHolder.Callback() {
        @Override
        public void surfaceDestroyed(SurfaceHolder arg0) {
            // Stop the preview and drop our references once the surface is gone.
            if (mCamera != null) {
                mCamera.stopPreview();
                mSurfaceView = null;
                mSurfaceHolder = null;
            }
        }

        @Override
        public void surfaceCreated(SurfaceHolder arg0) {
            // Route the camera preview to the newly created surface.
            try {
                if (mCamera != null) {
                    mCamera.setPreviewDisplay(arg0);
                    mSurfaceHolder = arg0;
                }
            } catch (IOException exception) {
                Log.e(TAG, "Error setting up preview display", exception);
            }
        }

        @Override
        public void surfaceChanged(SurfaceHolder arg0, int arg1, int arg2, int arg3) {
            if (mCamera == null)
                return;
            // Set the parameters
            Camera.Parameters parameters = mCamera.getParameters();
            parameters.setPreviewSize(640, 480);
            parameters.setPictureSize(640, 480);
            mCamera.setParameters(parameters);
            try {
                mCamera.startPreview();
                mSurfaceHolder = arg0;
            } catch (Exception e) {
                Log.e(TAG, "could not start preview", e);
                mCamera.release();
                mCamera = null;
            }
        }
    });

    private class EventHandler extends Handler
    {
        private Camera mCamera;

        public EventHandler(Camera c, Looper looper) {
            super(looper);
            mCamera = c;
        }

        @Override
        public void handleMessage(Message msg) {
            switch(msg.what) {
            case CAMERA_MSG_SHUTTER:
                if (mShutterCallback != null) {
                    mShutterCallback.onShutter();
                }
                return;

            case CAMERA_MSG_RAW_IMAGE:
                if (mRawImageCallback != null) {
                    mRawImageCallback.onPictureTaken((byte[])msg.obj, mCamera);
                }
                return;

            case CAMERA_MSG_COMPRESSED_IMAGE:
                if (mJpegCallback != null) {
                    mJpegCallback.onPictureTaken((byte[])msg.obj, mCamera);
                }
                return;

            case CAMERA_MSG_PREVIEW_FRAME:
                PreviewCallback pCb = mPreviewCallback;
                if (pCb != null) {
                    if (mOneShot) {
                        // Clear the callback variable before the callback
                        // in case the app calls setPreviewCallback from
                        // the callback function
                        mPreviewCallback = null;
                    } else if (!mWithBuffer) {
                        // We're faking the camera preview mode to prevent
                        // the app from being flooded with preview frames.
                        // Set to oneshot mode again.
                        setHasPreviewCallback(true, false);
                    }
                    pCb.onPreviewFrame((byte[])msg.obj, mCamera);
                }
                return;

            case CAMERA_MSG_POSTVIEW_FRAME:
                if (mPostviewCallback != null) {
                    mPostviewCallback.onPictureTaken((byte[])msg.obj, mCamera);
                }
                return;

            case CAMERA_MSG_FOCUS:
                AutoFocusCallback cb = null;
                synchronized (mAutoFocusCallbackLock) {
                    cb = mAutoFocusCallback;
                }
                if (cb != null) {
                    boolean success = msg.arg1 == 0 ? false : true;
                    cb.onAutoFocus(success, mCamera);
                }
                return;

            case CAMERA_MSG_ZOOM:
                if (mZoomListener != null) {
                    mZoomListener.onZoomChange(msg.arg1, msg.arg2 != 0, mCamera);
                }
                return;

            case CAMERA_MSG_PREVIEW_METADATA:
                if (mFaceListener != null) {
                    mFaceListener.onFaceDetection((Face[])msg.obj, mCamera);
                }
                return;

            case CAMERA_MSG_ERROR :
                Log.e(TAG, "Error " + msg.arg1);
                if (mErrorCallback != null) {
                    mErrorCallback.onError(msg.arg1, mCamera);
                }
                return;

            case CAMERA_MSG_FOCUS_MOVE:
                if (mAutoFocusMoveCallback != null) {
                    mAutoFocusMoveCallback.onAutoFocusMoving(msg.arg1 == 0 ? false : true, mCamera);
                }
                return;

            default:
                Log.e(TAG, "Unknown message type " + msg.what);
                return;
            }
        }
    }

    case CAMERA_MSG_COMPRESSED_IMAGE:
        if (mJpegCallback != null) {
            mJpegCallback.onPictureTaken((byte[])msg.obj, mCamera);
        }
        return;

    public interface PreviewCallback
    {
        /**
         * Called as preview frames are displayed. This callback is invoked
         * on the event thread {@link
         *
         * <p>If using the {@link android.graphics.ImageFormat
         * refer to the equations in {@link Camera.Parameters
         * for the arrangement of the pixel data in the preview callback
         * buffers.
         *
         * @param data the contents of the preview frame in the format defined
         * by {@link android.graphics.ImageFormat}, which can be queried
         * with {@link android.hardware.Camera.Parameters
         * If {@link android.hardware.Camera.Parameters
         * is never called, the default will be the YCbCr_420_SP
         * (NV21) format.
         * @param camera the Camera service object.
         */
        void onPreviewFrame(byte[] data, Camera camera);
    };

public native final void setPreviewTexture(SurfaceTexture surfaceTexture) throws IOException;

private native final void setPreviewDisplay(Surface surface) throws IOException;

import android.graphics.ImageFormat;

import android.graphics.Rect;

import android.graphics.YuvImage;

import android.media.Image;

import android.text.Spanned;

import android.util.Log;

import com.android.internal.logging.nano.MetricsProto;

import java.io.ByteArrayOutputStream;

import java.io.FileOutputStream;

import java.io.IOException;

import java.nio.ByteBuffer;

public class ImageUtil {

public static final int COLOR_FormatI420 = 1;

public static final int COLOR_FormatNV21 = 2;

public static final int NV21 = 2;

private static final String TAG = "ImageUtil";

public static final int YUV420P = 0;

public static final int YUV420SP = 1;

private static boolean isImageFormatSupported(Image image) {

int format = image.getFormat();

if (format == 17 || format == 35 || format == 842094169) {

return true;

}

return false;

}

public static void dumpFile(String str, byte[] bArr) {

try {

FileOutputStream fileOutputStream = new FileOutputStream(str);

try {

fileOutputStream.write(bArr);

fileOutputStream.close();

} catch (IOException e) {

throw new RuntimeException("failed writing data to file " + str, e);

}

} catch (IOException e2) {

throw new RuntimeException("Unable to create output file " + str, e2);

}

}

public static void compressToJpeg(String str, Image image) {

try {

FileOutputStream fileOutputStream = new FileOutputStream(str);

Rect cropRect = image.getCropRect();

new YuvImage(getDataFromImage(image, 2), 17, cropRect.width(), cropRect.height(), (int[]) null).compressToJpeg(cropRect, 100, fileOutputStream);

} catch (IOException e) {

throw new RuntimeException("Unable to create output file " + str, e);

}

}

public static byte[] yuvToCompressedJPEG(byte[] bArr, Rect rect) {

ByteArrayOutputStream byteArrayOutputStream = new ByteArrayOutputStream();

new YuvImage(bArr, 17, rect.width(), rect.height(), (int[]) null).compressToJpeg(rect, 100, byteArrayOutputStream);

return byteArrayOutputStream.toByteArray();

}

public static byte[] getDataFromImage(Image image, int i) {

Rect rect;

int i2;

int i3 = i;

int i4 = 2;

int i5 = 1;

if (i3 != 1 && i3 != 2) {

throw new IllegalArgumentException("only support COLOR_FormatI420 and COLOR_FormatNV21");

} else if (isImageFormatSupported(image)) {

Rect cropRect = image.getCropRect();

int format = image.getFormat();

int width = cropRect.width();

int height = cropRect.height();

Image.Plane[] planes = image.getPlanes();

int i6 = width * height;

byte[] bArr = new byte[((ImageFormat.getBitsPerPixel(format) * i6) / 8)];

int i7 = 0;

byte[] bArr2 = new byte[planes[0].getRowStride()];

int i8 = 1;

int i9 = 0;

int i10 = 0;

while (i9 < planes.length) {

switch (i9) {

case 0:

i8 = i5;

i10 = i7;

break;

case 1:

if (i3 != i5) {

if (i3 == i4) {

i10 = i6 + 1;

i8 = i4;

break;

}

} else {

i8 = i5;

i10 = i6;

break;

}

break;

case 2:

if (i3 != i5) {

if (i3 == i4) {

i8 = i4;

i10 = i6;

break;

}

} else {

i10 = (int) (((double) i6) * 1.25d);

i8 = i5;

break;

}

break;

}

ByteBuffer buffer = planes[i9].getBuffer();

int rowStride = planes[i9].getRowStride();

int pixelStride = planes[i9].getPixelStride();

int i11 = i9 == 0 ? i7 : i5;

int i12 = width >> i11;

int i13 = height >> i11;

int i14 = width;

buffer.position(((cropRect.top >> i11) * rowStride) + ((cropRect.left >> i11) * pixelStride));

int i15 = 0;

while (i15 < i13) {

if (pixelStride == 1 && i8 == 1) {

buffer.get(bArr, i10, i12);

i10 += i12;

rect = cropRect;

i2 = i12;

} else {

i2 = ((i12 - 1) * pixelStride) + 1;

rect = cropRect;

buffer.get(bArr2, 0, i2);

int i16 = i10;

for (int i17 = 0; i17 < i12; i17++) {

bArr[i16] = bArr2[i17 * pixelStride];

i16 += i8;

}

i10 = i16;

}

if (i15 < i13 - 1) {

buffer.position((buffer.position() + rowStride) - i2);

}

i15++;

cropRect = rect;

}

Log.v(TAG, "Finished reading data from plane " + i9);

i9++;

i5 = 1;

width = i14;

i3 = i;

i4 = 2;

i7 = 0;

}

return bArr;

} else {

throw new RuntimeException("can't convert Image to byte array, format " + image.getFormat());

}

}

public static byte[] getBytesFromImageAsType(Image image, int i) {

Image.Plane[] planeArr;

Image.Plane[] planeArr2;

try {

Image.Plane[] planes = image.getPlanes();

int width = image.getWidth();

int height = image.getHeight();

int i2 = width * height;

byte[] bArr = new byte[((ImageFormat.getBitsPerPixel(35) * i2) / 8)];

byte[] bArr2 = new byte[(i2 / 4)];

byte[] bArr3 = new byte[(i2 / 4)];

int i3 = 0;

int i4 = 0;

int i5 = 0;

int i6 = 0;

while (i3 < planes.length) {

int pixelStride = planes[i3].getPixelStride();

int rowStride = planes[i3].getRowStride();

ByteBuffer buffer = planes[i3].getBuffer();

byte[] bArr4 = new byte[buffer.capacity()];

buffer.get(bArr4);

if (i3 == 0) {

int i7 = i4;

int i8 = 0;

for (int i9 = 0; i9 < height; i9++) {

System.arraycopy(bArr4, i8, bArr, i7, width);

i8 += rowStride;

i7 += width;

}

planeArr = planes;

i4 = i7;

} else if (i3 == 1) {

int i10 = i5;

int i11 = 0;

for (int i12 = 0; i12 < height / 2; i12++) {

int i13 = 0;

while (i13 < width / 2) {

bArr2[i10] = bArr4[i11];

i11 += pixelStride;

i13++;

i10++;

}

if (pixelStride == 2) {

i11 += rowStride - width;

} else if (pixelStride == 1) {

i11 += rowStride - (width / 2);

}

}

planeArr = planes;

i5 = i10;

} else if (i3 == 2) {

int i14 = i6;

int i15 = 0;

int i16 = 0;

while (i15 < height / 2) {

int i17 = i16;

int i18 = 0;

while (true) {

planeArr2 = planes;

if (i18 >= width / 2) {

break;

}

bArr3[i14] = bArr4[i17];

i17 += pixelStride;

i18++;

i14++;

planes = planeArr2;

}

if (pixelStride == 2) {

i17 += rowStride - width;

} else if (pixelStride == 1) {

i17 += rowStride - (width / 2);

}

i15++;

i16 = i17;

planes = planeArr2;

}

planeArr = planes;

i6 = i14;

} else {

planeArr = planes;

}

i3++;

planes = planeArr;

}

switch (i) {

case 0:

System.arraycopy(bArr2, 0, bArr, i4, bArr2.length);

System.arraycopy(bArr3, 0, bArr, i4 + bArr2.length, bArr3.length);

break;

case 1:

for (int i19 = 0; i19 < bArr3.length; i19++) {

int i20 = i4 + 1;

bArr[i4] = bArr2[i19];

i4 = i20 + 1;

bArr[i20] = bArr3[i19];

}

break;

case 2:

for (int i21 = 0; i21 < bArr3.length; i21++) {

int i22 = i4 + 1;

bArr[i4] = bArr3[i21];

i4 = i22 + 1;

bArr[i22] = bArr2[i21];

}

break;

}

return bArr;

} catch (Exception e) {

if (image == null) {

return null;

}

image.close();

return null;

}

}

public static int[] decodeYUV420SP(byte[] bArr, int i, int i2) {

int i3 = i;

int i4 = i2;

int i5 = i3 * i4; // frame size is width * height

int[] iArr = new int[i5];

int i6 = 0;

int i7 = 0;

while (i6 < i4) {

int i8 = ((i6 >> 1) * i3) + i5;

int i9 = 0;

int i10 = 0;

int i11 = i7;

int i12 = 0;

while (i12 < i3) {

int i13 = (bArr[i11] & 255) - 16;

if (i13 < 0) {

i13 = 0;

}

if ((i12 & 1) == 0) {

int i14 = i8 + 1;

int i15 = i14 + 1;

int i16 = (bArr[i14] & 255) - 128;

i9 = (bArr[i8] & 255) - 128;

i8 = i15;

i10 = i16;

}

int i17 = 1192 * i13;

int i18 = (1634 * i9) + i17;

int i19 = (i17 - (833 * i9)) - (400 * i10);

int i20 = i17 + (2066 * i10);

if (i18 < 0) {

i18 = 0;

} else if (i18 > 262143) {

i18 = 262143;

}

if (i19 < 0) {

i19 = 0;

} else if (i19 > 262143) {

i19 = 262143;

}

if (i20 < 0) {

i20 = 0;

} else if (i20 > 262143) {

i20 = 262143;

}

iArr[i11] = -16777216 | ((i18 << 6) & 0xFF0000) | ((i19 >> 2) & 65280) | ((i20 >> 10) & 255);

i11++;

}

i6++;

i7 = i11;

}

return iArr;

}

}
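The decodeYUV420SP routine above has machine-generated variable names and is hard to follow. As a reading aid, its per-pixel arithmetic can be sketched as below. YuvPixel and toArgb are hypothetical names, and the coefficients follow the commonly circulated fixed-point version of this conversion, which is an assumption about what the code above is meant to compute.

```java
/** Hypothetical single-pixel version of the fixed-point YUV to ARGB conversion. */
final class YuvPixel {
    private static final int MAX = 262143; // 2^18 - 1, the upper bound of the fixed-point range

    private YuvPixel() {}

    /** Converts one pixel; y, u and v are unsigned sample values in 0..255. */
    static int toArgb(int y, int u, int v) {
        int luma = Math.max(y - 16, 0); // video-range luma starts at 16
        int cb = u - 128;               // chroma samples are centered on 128
        int cr = v - 128;
        int scaledLuma = 1192 * luma;
        int r = clamp(scaledLuma + 1634 * cr);
        int g = clamp(scaledLuma - 833 * cr - 400 * cb);
        int b = clamp(scaledLuma + 2066 * cb);
        // Each channel holds 18 significant bits; shift its top 8 bits into place.
        return 0xFF000000 | ((r << 6) & 0x00FF0000) | ((g >> 2) & 0x0000FF00) | ((b >> 10) & 0x000000FF);
    }

    private static int clamp(int value) {
        return Math.max(0, Math.min(value, MAX));
    }
}
```

With neutral chroma (u = v = 128) the result is a gray: y = 16 maps to opaque black and y = 255 saturates to opaque white.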
