[Original] The asar encryption algorithm of a certain download application

Posted: 2024-10-16 17:57

Asar is a simple, extensible archive format. Like tar, it concatenates all files together without compression, but it also supports random access.
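For reference, here is a minimal sketch of the standard, unencrypted asar layout that the loader below is built around. It assumes the usual Chromium Pickle framing: a uint32 payload size, followed by fields aligned to 4 bytes. `buildAsar`, `readAsarHeader` and `readFileFromAsar` are hypothetical helpers, not the asar package's API. The point is the random access: one file is read by seeking to `dataStart + offset`, without touching the others.

```javascript
// Sketch of the standard (unencrypted) asar layout:
//   [0, 8)        size pickle: uint32 payloadSize (= 4), uint32 headerPickleSize H
//   [8, 8 + H)    header pickle: uint32 payloadSize, int32 strLen, UTF-8 JSON, zero padding
//   [8 + H, ...)  file contents, concatenated without compression
// buildAsar/readAsarHeader/readFileFromAsar are hypothetical helpers.
const align4 = (n) => Math.ceil(n / 4) * 4

// Wrap one string in a pickle: payload = int32 length field + padded string bytes.
function pickleString(str) {
  const body = Buffer.from(str, 'utf8')
  const buf = Buffer.alloc(8 + align4(body.length)) // zero-filled, so padding is 0x00
  buf.writeUInt32LE(4 + align4(body.length), 0)
  buf.writeInt32LE(body.length, 4)
  body.copy(buf, 8)
  return buf
}

function buildAsar(files) {
  const header = { files: {} }
  const contents = []
  let offset = 0
  for (const [name, text] of Object.entries(files)) {
    const data = Buffer.from(text, 'utf8')
    // asar stores offsets as decimal strings
    header.files[name] = { size: data.length, offset: String(offset) }
    contents.push(data)
    offset += data.length
  }
  const headerPickle = pickleString(JSON.stringify(header))
  const sizePickle = Buffer.alloc(8)
  sizePickle.writeUInt32LE(4, 0) // payload of the size pickle is one uint32
  sizePickle.writeUInt32LE(headerPickle.length, 4)
  return Buffer.concat([sizePickle, headerPickle, ...contents])
}

function readAsarHeader(buf) {
  const headerPickleSize = buf.readUInt32LE(4)
  const strLen = buf.readInt32LE(12) // skip the header pickle's own payload-size field
  const json = buf.toString('utf8', 16, 16 + strLen)
  return { header: JSON.parse(json), dataStart: 8 + headerPickleSize }
}

// Random access: jump straight to one entry without scanning the others.
function readFileFromAsar(buf, name) {
  const { header, dataStart } = readAsarHeader(buf)
  const entry = header.files[name]
  const start = dataStart + Number(entry.offset)
  return buf.toString('utf8', start, start + entry.size)
}

const archive = buildAsar({ 'a.txt': 'hello', 'b.txt': 'world!' })
console.log(readFileFromAsar(archive, 'b.txt')) // world!
```

The encrypted variant discussed below keeps the 8-byte size pickle at the front. Everything after it is replaced with RSA and AES ciphertext.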

The front end of this download application is also built with Electron, but its asar archive is encrypted. Here is the code:

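Judging from the code, the encryption is fairly simple: one RSA layer and one AES-128-ECB layer, both standard algorithms. RSA protects the header's key material, which is decrypted with a public key embedded in the loader. AES-128-ECB protects the header JSON and every file flagged as encrypted. The decryption routine itself actually lives inside a DLL. If you are interested, try to find out which DLL it is; you are in for a surprise when you do.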
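Both primitives come straight from Node's `crypto` module, which is what the loader uses. Below is a minimal sketch in which a freshly generated throwaway RSA key pair stands in for the app's real keys, which are not reproduced here.

- **RSA layer:** data is encrypted with the RSA *private* key and recovered with `crypto.publicDecrypt`. That is the only direction `publicDecrypt` can undo.
- **AES layer:** AES-128-ECB runs with auto-padding disabled, so the input must already be a multiple of 16 bytes.

The AES key and sample strings are made up.

```javascript
const crypto = require('crypto')

// Throwaway key pair for the demo; the app itself ships only a public key.
const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
  publicKeyEncoding: { type: 'spki', format: 'pem' }, // "BEGIN PUBLIC KEY", as in the loader
  privateKeyEncoding: { type: 'pkcs8', format: 'pem' },
})

// RSA layer: encrypt with the private key, decrypt with the public key
// (PKCS#1 v1.5 padding is Node's default for both calls).
const secret = Buffer.from('small header blob')
const rsaCipher = crypto.privateEncrypt(privateKey, secret)
const rsaPlain = crypto.publicDecrypt(publicKey, rsaCipher)

// AES layer: AES-128-ECB, 16-byte key, no IV, no automatic padding.
function aesEcb(encrypt, key, data) {
  const c = encrypt
    ? crypto.createCipheriv('aes-128-ecb', key, null)
    : crypto.createDecipheriv('aes-128-ecb', key, null)
  c.setAutoPadding(false) // final() throws unless data.length % 16 === 0
  return Buffer.concat([c.update(data), c.final()])
}

const aesKey = Buffer.from('0123456789abcdef') // hypothetical key
const block = Buffer.from('exactly 32 bytes of plaintext!!!')
const aesCipher = aesEcb(true, aesKey, block)

console.log(rsaPlain.toString()) // small header blob
console.log(aesEcb(false, aesKey, aesCipher).toString()) // exactly 32 bytes of plaintext!!!
```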

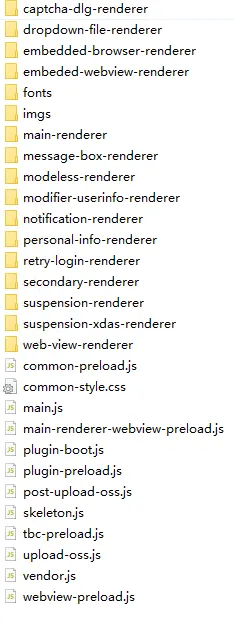

After decryption, the extracted code is as follows:

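Once the code is decrypted, you can modify it to add whatever features you need and then repack it. In short, with the source in hand you can do pretty much anything with it.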

All code in this post is for technical discussion only; please do not use it for any other purpose.


```javascript
'use strict';(function () {
  const asar = process._linkedBinding('atom_common_asar')
  const v8Util = process._linkedBinding('atom_common_v8_util')
  const { Buffer } = require('buffer')
  const Module = require('module')
  const path = require('path')
  const util = require('util')
  //###################################
  const crypto = require('crypto')
  //###################################
  const Promise = global.Promise

  const envNoAsar =
    process.env.ELECTRON_NO_ASAR &&
    process.type !== 'browser' &&
    process.type !== 'renderer'
  const isAsarDisabled = () => process.noAsar || envNoAsar

  const internalBinding = process.internalBinding
  delete process.internalBinding

  /**
   * @param {!Function} functionToCall
   * @param {!Array|undefined} args
   */
  const nextTick = (functionToCall, args = []) => {
    process.nextTick(() => functionToCall(...args))
  }

  // Cache asar archive objects.
  const cachedArchives = new Map()
  // Cache of per-archive AES keys recovered from the encrypted header.
  const cachedArchiveKeys = {}

  // Trial-division primality test, used only to derive the fixed AES key below.
  const isPrime = (n) => {
    if (n < 2) return false
    if (n == 2) return true
    if (n % 2 == 0) return false
    let foo = n / 2
    for (let i = 3; i <= foo; i += 2) if (n % i == 0) return false
    return true
  }

  // Decrypt header information.
  const decryptHeader = (data) => {
    let pubKey = ''
    pubKey +=
      ['-', '-', '-', '-', '-', 'B', 'E', 'G', 'I', 'N', ' ', 'P', 'U',
       'B', 'L', 'I', 'C', ' ', 'K', 'E', 'Y', '-', '-', '-', '-', '-'].join('') + '\r\n'

    // AES key = the first 16 primes starting from 31 (31, 37, ..., 101), one per byte.
    let num = 31
    let keylist = []
    for (let i = 0; i < 16; num++) {
      if (isPrime(num)) {
        keylist.push(num)
        i++
      }
    }
    let key = Buffer.from(keylist)

    // 6 x 64 bytes of AES-128-ECB ciphertext; each 64-byte chunk decrypts
    // to one line of the PEM body.
    let list = [
      110, 170, 13, 242, 151, 96, 97, 176, 68, 151, 34, 31, 98, 40, 95, 251,
      176, 35, 121, 231, 91, 99, 152, 196, 95, 67, 111, 216, 41, 35, 219, 26,
      237, 60, 239, 178, 61, 243, 248, 190, 166, 169, 22, 171, 45, 3, 96, 7, 73,
      211, 152, 91, 2, 213, 65, 161, 84, 233, 248, 94, 248, 184, 178, 202, 18,
      234, 38, 140, 32, 100, 226, 56, 201, 152, 103, 187, 223, 213, 9, 196, 222,
      254, 108, 77, 50, 235, 19, 219, 188, 246, 28, 231, 175, 179, 151, 159,
      123, 13, 243, 206, 171, 84, 170, 125, 138, 88, 246, 156, 131, 246, 197,
      236, 78, 163, 89, 104, 17, 28, 106, 18, 64, 193, 33, 227, 90, 177, 110,
      19, 27, 37, 241, 170, 234, 1, 158, 5, 182, 136, 86, 179, 8, 106, 194, 120,
      33, 46, 152, 172, 76, 12, 137, 78, 149, 234, 90, 175, 163, 116, 151, 79,
      246, 115, 39, 123, 207, 185, 51, 172, 103, 128, 67, 125, 127, 166, 177,
      150, 33, 144, 147, 27, 78, 53, 0, 103, 140, 102, 73, 207, 72, 121, 237,
      117, 52, 5, 254, 217, 131, 77, 178, 67, 141, 37, 98, 141, 83, 236, 169, 7,
      208, 214, 218, 222, 227, 197, 227, 193, 117, 65, 242, 156, 172, 21, 191,
      29, 236, 218, 75, 43, 23, 56, 54, 40, 25, 0, 184, 183, 213, 173, 97, 115,
      242, 72, 18, 53, 252, 8, 113, 100, 17, 142, 20, 214, 176, 162, 249, 182,
      232, 154, 32, 224, 62, 217, 44, 170, 179, 127, 89, 114, 179, 106, 120, 79,
      22, 65, 67, 198, 164, 3, 111, 206, 172, 150, 213, 150, 254, 135, 140, 168,
      115, 235, 187, 133, 209, 130, 198, 73, 148, 118, 68, 81, 190, 166, 102,
      178, 231, 177, 63, 177, 44, 35, 164, 182, 226, 222, 199, 42, 15, 153, 164,
      190, 90, 19, 18, 3, 241, 12, 57, 121, 33, 82, 253, 178, 184, 197, 125,
      212, 232, 188, 114, 139, 136, 25, 71, 196, 129, 148, 163, 56, 184, 133,
      30, 83, 99, 58, 160, 62, 81, 50, 175, 199, 203, 243, 99, 7, 218, 156, 219,
      228, 33, 69, 45, 133, 124, 160, 228, 89, 252, 68, 91, 178, 4, 28, 208, 15,
    ]

    for (let i = 0; i < 6; i++) {
      var cipherChunks = []
      var decipher = crypto.createDecipheriv('aes-128-ecb', key, Buffer.from([]))
      decipher.setAutoPadding(false)
      cipherChunks.push(
        decipher.update(Buffer.from(list.slice(i * 64, (i + 1) * 64)))
      )
      cipherChunks.push(decipher.final())
      let res = Buffer.concat(cipherChunks)
      pubKey += res.toString() + '\r\n'
    }

    // Final (short) line of the key body, stored in the clear.
    pubKey += ['q', 'Q', 'I', 'D', 'A', 'Q', 'A', 'B'].join('') + '\r\n'
    pubKey +=
      ['-', '-', '-', '-', '-', 'E', 'N', 'D', ' ', 'P', 'U', 'B',
       'L', 'I', 'C', ' ', 'K', 'E', 'Y', '-', '-', '-', '-', '-'].join('') + '\r\n'

    // The blob was encrypted with the matching RSA private key,
    // so the embedded public key decrypts it.
    let dec_by_pub = crypto.publicDecrypt(pubKey, data)
    return dec_by_pub
  }
```
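The key that hides the embedded public key is not stored anywhere in the loader. It is regenerated as the first 16 primes from 31 upward, one byte each.

The sketch below reproduces that derivation. It then hides and recovers one 64-character PEM line the same way `decryptHeader` does. The loader decrypts six such lines and then appends the clear-text tail `qQIDAQAB`. A total of 6 × 64 + 8 = 392 base64 characters appears consistent with a 2048-bit RSA public key with exponent 65537, though that is an inference. The sample line is made up; the app's real ciphertext is the `list` array above.

```javascript
const crypto = require('crypto')

// Same trial division as isPrime() in the loader (bounded by n / 2).
function isPrime(n) {
  if (n < 2) return false
  if (n === 2) return true
  if (n % 2 === 0) return false
  for (let i = 3; i <= n / 2; i += 2) if (n % i === 0) return false
  return true
}

// First 16 primes >= 31, one byte each -> a 16-byte AES-128 key.
function deriveKey() {
  const primes = []
  for (let n = 31; primes.length < 16; n++) if (isPrime(n)) primes.push(n)
  return Buffer.from(primes)
}

// AES-128-ECB without padding, as used for each 64-byte chunk.
function ecb(encrypt, key, data) {
  const c = encrypt
    ? crypto.createCipheriv('aes-128-ecb', key, null)
    : crypto.createDecipheriv('aes-128-ecb', key, null)
  c.setAutoPadding(false)
  return Buffer.concat([c.update(data), c.final()])
}

const key = deriveKey()
// Hypothetical 64-character PEM line (not the app's real key material).
const line = 'sample-pem-line-'.repeat(4)
const hidden = [...ecb(true, key, Buffer.from(line))] // what one `list` chunk would hold
const recovered = ecb(false, key, Buffer.from(hidden)).toString()

console.log(key.toString('hex')) // 1f25292b2f353b3d4347494f53596165
console.log(recovered === line) // true
```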

```javascript
  const getOrCreateArchive = (archivePath) => {
    const isCached = cachedArchives.has(archivePath)
    if (isCached) {
      return cachedArchives.get(archivePath)
    }
    const newArchive = asar.createArchive(archivePath)
    if (!newArchive) return null
    cachedArchives.set(archivePath, newArchive)
    return newArchive
  }

  const CAPACITY_READ_ONLY = 9007199254740992 // Largest JS number.

  // sizeof(T).
  const SIZE_UINT32 = 4
  const SIZE_INT32 = 4

  // Aligns 'i' by rounding it up to the next multiple of 'alignment'.
  const alignInt = function (i, alignment) {
    return i + ((alignment - (i % alignment)) % alignment)
  }

  const PickleIterator = (function () {
    function PickleIterator(pickle) {
      this.payload = pickle.header
      this.payloadOffset = pickle.headerSize
      this.readIndex = 0
      this.endIndex = pickle.getPayloadSize()
    }

    PickleIterator.prototype.readUInt32 = function () {
      return this.readBytes(SIZE_UINT32, Buffer.prototype.readUInt32LE)
    }

    PickleIterator.prototype.readInt = function () {
      return this.readBytes(SIZE_INT32, Buffer.prototype.readInt32LE)
    }

    PickleIterator.prototype.readString = function () {
      return this.readBytes(this.readInt()).toString()
    }

    PickleIterator.prototype.readBytes = function (length, method) {
      var readPayloadOffset = this.getReadPayloadOffsetAndAdvance(length)
      if (method != null) {
        return method.call(this.payload, readPayloadOffset, length)
      } else {
        return this.payload.slice(readPayloadOffset, readPayloadOffset + length)
      }
    }

    PickleIterator.prototype.getReadPayloadOffsetAndAdvance = function (length) {
      if (length > this.endIndex - this.readIndex) {
        this.readIndex = this.endIndex
        throw new Error('Failed to read data with length of ' + length)
      }
      var readPayloadOffset = this.payloadOffset + this.readIndex
      this.advance(length)
      return readPayloadOffset
    }

    PickleIterator.prototype.advance = function (size) {
      var alignedSize = alignInt(size, SIZE_UINT32)
      if (this.endIndex - this.readIndex < alignedSize) {
        this.readIndex = this.endIndex
      } else {
        this.readIndex += alignedSize
      }
    }

    return PickleIterator
  })()

  // Implement a tiny pickle for chromium-pickle-js to read the asar header.
  const tinyPickle = (function () {
    function tinyPickle(buffer) {
      this.header = buffer
      this.headerSize = buffer.length - this.getPayloadSize()
      this.capacityAfterHeader = CAPACITY_READ_ONLY
      this.writeOffset = 0
      if (this.headerSize > buffer.length) {
        this.headerSize = 0
      }
      if (this.headerSize !== alignInt(this.headerSize, SIZE_UINT32)) {
        this.headerSize = 0
      }
      if (this.headerSize === 0) {
        this.header = Buffer.alloc(0)
      }
    }

    tinyPickle.prototype.getPayloadSize = function () {
      return this.header.readUInt32LE(0)
    }

    tinyPickle.prototype.createIterator = function () {
      return new PickleIterator(this)
    }

    return tinyPickle
  })()

  // Layout of an encrypted archive, as read below:
  //   [0, 8)                        size pickle: uint32 nRsaCipherLength
  //   [8, 8 + nRsa)                 RSA ciphertext -> pickle { uint32 nAesCipherLength, string md5, string aesKey }
  //   [8 + nRsa, 8 + nRsa + nAes)   AES-128-ECB ciphertext of the JSON header
  const getOrReadArchiveHeader = (archivePath, fs) => {
    if (cachedArchiveKeys[archivePath] !== undefined) {
      return cachedArchiveKeys[archivePath]
    }
    // if (!archivePath.includes('default_app.asar')) {
    const fd = cachedArchives.get(archivePath).getFd()
    let buffer = Buffer.alloc(8) // for default header size
    // params: fd, buffer, offset (into buffer), length, position (in file)
    fs.readSync(fd, buffer, 0, 8, 0)
    const sizePickle = new tinyPickle(buffer)
    let nRsaCipherLength = sizePickle.createIterator().readUInt32()
    // console.log('### nRsaCipherLength:', nRsaCipherLength);

    buffer = Buffer.alloc(nRsaCipherLength)
    fs.readSync(fd, buffer, 0, nRsaCipherLength, 8)
    // UINT32|string|string
    // const rsaHeaderPickle = new tinyPickle(buffer);
    // const rsa_encrypted_str = rsaHeaderPickle.createIterator().readString();
    // let headerStr = Buffer.toString(); // read header str
    let rsaPlaintext = decryptHeader(buffer)
    // console.log('### rsaPlaintext length:', rsaPlaintext.length);

    let headerPicklePickle = new tinyPickle(rsaPlaintext).createIterator()
    let nAesCipherLength = headerPicklePickle.readUInt32()
    let headermd5 = headerPicklePickle.readString()
    // decrypt header
    let aeskey = headerPicklePickle.readString()
    // console.log('### rsaPlaintext, nAesCipherLength: ', nAesCipherLength, ' md5:', headermd5, ' aeskey:', aeskey);

    let data = Buffer.alloc(nAesCipherLength)
    fs.readSync(fd, data, 0, nAesCipherLength, 8 + nRsaCipherLength)
    var cipherChunks = []
    var decipher = crypto.createDecipheriv('aes-128-ecb', aeskey, Buffer.from([]))
    decipher.setAutoPadding(false)
    cipherChunks.push(decipher.update(data, undefined, 'utf8'))
    cipherChunks.push(decipher.final('utf8'))
    let header = JSON.parse(cipherChunks.join(''))

    // verify header md5
    let filesStr = JSON.stringify(header.files)
    // console.log('### aes decrypted: ', filesStr);
    // Note: the md5 is computed over the AES ciphertext (`data`), not over filesStr.
    const hash = crypto.createHash('md5')
    hash.update(data)
    let filesMd5 = hash.digest('hex')
    if (headermd5 !== filesMd5) {
      // console.warn('### headermd5: ', headermd5, ' filesmd5: ', filesMd5);
      throw createError(AsarError.INVALID_ARCHIVE, { asarPath: archivePath })
    }
    cachedArchiveKeys[archivePath] = aeskey
    return aeskey
    // }
    // return '';
  }
```
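To make that header layout concrete, here is a round-trip sketch. It builds a blob in the format `getOrReadArchiveHeader` expects and parses it back the same way, reading the pickle fields in the same order. The following are all assumptions for illustration, since the real packer and private key are not part of the post:

- the helpers `pack` and `unpack`;
- the throwaway key pair;
- the sample AES key;
- the space padding of the JSON.

As in the loader, the md5 check covers the AES ciphertext rather than the decrypted JSON.

```javascript
const crypto = require('crypto')

const align4 = (n) => Math.ceil(n / 4) * 4

// Chromium-style pickle: uint32 payloadSize, then one uint32 and length-prefixed strings.
function writePickle(u32, strings) {
  const head = Buffer.alloc(4)
  head.writeUInt32LE(u32, 0)
  const parts = [head]
  for (const s of strings) {
    const body = Buffer.from(s, 'utf8')
    const field = Buffer.alloc(4 + align4(body.length)) // zero padding to 4 bytes
    field.writeInt32LE(body.length, 0)
    body.copy(field, 4)
    parts.push(field)
  }
  const payload = Buffer.concat(parts)
  const size = Buffer.alloc(4)
  size.writeUInt32LE(payload.length, 0)
  return Buffer.concat([size, payload])
}

// Read back the uint32 and two strings, like PickleIterator.readUInt32/readString.
function readPickle(buf) {
  let pos = 4 // skip payloadSize
  const u32 = buf.readUInt32LE(pos)
  pos += 4
  const strings = []
  for (let k = 0; k < 2; k++) {
    const len = buf.readInt32LE(pos)
    pos += 4
    strings.push(buf.toString('utf8', pos, pos + len))
    pos += align4(len)
  }
  return { u32, strings }
}

function ecb(encrypt, key, data) {
  const c = encrypt
    ? crypto.createCipheriv('aes-128-ecb', key, null)
    : crypto.createDecipheriv('aes-128-ecb', key, null)
  c.setAutoPadding(false)
  return Buffer.concat([c.update(data), c.final()])
}

const md5hex = (buf) => crypto.createHash('md5').update(buf).digest('hex')

// Hypothetical packer: JSON is space-padded to a 16-byte multiple (JSON.parse ignores it).
function pack(headerJson, aesKey, privateKey) {
  const json = Buffer.from(headerJson, 'utf8')
  const padded = Buffer.concat([json, Buffer.alloc((16 - (json.length % 16)) % 16, ' ')])
  const aesCipher = ecb(true, aesKey, padded)
  const inner = writePickle(aesCipher.length, [md5hex(aesCipher), aesKey])
  const rsaCipher = crypto.privateEncrypt(privateKey, inner)
  const sizePickle = Buffer.alloc(8)
  sizePickle.writeUInt32LE(4, 0)
  sizePickle.writeUInt32LE(rsaCipher.length, 4)
  return Buffer.concat([sizePickle, rsaCipher, aesCipher])
}

// Mirrors getOrReadArchiveHeader(), but on an in-memory buffer instead of an fd.
function unpack(blob, publicKey) {
  const nRsa = blob.readUInt32LE(4)
  const inner = crypto.publicDecrypt(publicKey, blob.subarray(8, 8 + nRsa))
  const { u32: nAes, strings: [md5, aesKey] } = readPickle(inner)
  const aesCipher = blob.subarray(8 + nRsa, 8 + nRsa + nAes)
  if (md5hex(aesCipher) !== md5) throw new Error('header md5 mismatch')
  const header = JSON.parse(ecb(false, aesKey, aesCipher).toString('utf8'))
  return { header, aesKey }
}

const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
  publicKeyEncoding: { type: 'spki', format: 'pem' },
  privateKeyEncoding: { type: 'pkcs8', format: 'pem' },
})
const headerJson = JSON.stringify({ files: { 'main.js': { size: 32, offset: '0', encrypted: true } } })
const blob = pack(headerJson, '0123456789abcdef', privateKey)
const { header, aesKey } = unpack(blob, publicKey)
console.log(header.files['main.js'].encrypted, aesKey) // true 0123456789abcdef
```

Flipping any byte of the AES section makes `unpack` throw on the md5 comparison before any AES decryption happens. The loader behaves the same way, throwing `INVALID_ARCHIVE` at that point.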

```javascript
  // Separate asar package's path from full path.
  const splitPath = (archivePathOrBuffer) => {
    // Shortcut for disabled asar.
    if (isAsarDisabled()) return { isAsar: false }

    // Check for a bad argument type.
    let archivePath = archivePathOrBuffer
    if (Buffer.isBuffer(archivePathOrBuffer)) {
      archivePath = archivePathOrBuffer.toString()
    }
    if (typeof archivePath !== 'string') return { isAsar: false }

    return asar.splitPath(path.normalize(archivePath))
  }

  // Convert asar archive's Stats object to fs's Stats object.
  let nextInode = 0

  const uid = process.getuid != null ? process.getuid() : 0
  const gid = process.getgid != null ? process.getgid() : 0

  const fakeTime = new Date()
  const msec = (date) => (date || fakeTime).getTime()

  const asarStatsToFsStats = function (stats) {
    const { Stats, constants } = require('fs')

    let mode =
      constants.S_IROTH ^
      constants.S_IRGRP ^
      constants.S_IRUSR ^
      constants.S_IWUSR

    if (stats.isFile) {
      mode ^= constants.S_IFREG
    } else if (stats.isDirectory) {
      mode ^= constants.S_IFDIR
    } else if (stats.isLink) {
      mode ^= constants.S_IFLNK
    }

    return new Stats(
      1, // dev
      mode, // mode
      1, // nlink
      uid,
      gid,
      0, // rdev
      undefined, // blksize
      ++nextInode, // ino
      stats.size,
      undefined, // blocks
      msec(stats.atime), // atim_msec
      msec(stats.mtime), // mtim_msec
      msec(stats.ctime), // ctim_msec
      msec(stats.birthtime) // birthtim_msec
    )
  }

  const AsarError = {
    NOT_FOUND: 'NOT_FOUND',
    NOT_DIR: 'NOT_DIR',
    NO_ACCESS: 'NO_ACCESS',
    INVALID_ARCHIVE: 'INVALID_ARCHIVE',
  }

  const createError = (errorType, { asarPath, filePath } = {}) => {
    let error
    switch (errorType) {
      case AsarError.NOT_FOUND:
        error = new Error(`ENOENT, ${filePath} not found in ${asarPath}`)
        error.code = 'ENOENT'
        error.errno = -2
        break
      case AsarError.NOT_DIR:
        error = new Error('ENOTDIR, not a directory')
        error.code = 'ENOTDIR'
        error.errno = -20
        break
      case AsarError.NO_ACCESS:
        error = new Error(`EACCES: permission denied, access '${filePath}'`)
        error.code = 'EACCES'
        error.errno = -13
        break
      case AsarError.INVALID_ARCHIVE:
        error = new Error(`Invalid package ${asarPath}`)
        break
      default:
        throw new Error(`Invalid error type "${errorType}" passed to createError.`)
    }
    return error
  }

  const overrideAPISync = function (module, name, pathArgumentIndex, fromAsync) {
    if (pathArgumentIndex == null) pathArgumentIndex = 0
    const old = module[name]
    const func = function () {
      const pathArgument = arguments[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return old.apply(this, arguments)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) throw createError(AsarError.INVALID_ARCHIVE, { asarPath })

      const newPath = archive.copyFileOut(filePath)
      if (!newPath) throw createError(AsarError.NOT_FOUND, { asarPath, filePath })

      arguments[pathArgumentIndex] = newPath
      return old.apply(this, arguments)
    }
    if (fromAsync) {
      return func
    }
    module[name] = func
  }

  const overrideAPI = function (module, name, pathArgumentIndex) {
    if (pathArgumentIndex == null) pathArgumentIndex = 0
    const old = module[name]
    module[name] = function () {
      const pathArgument = arguments[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return old.apply(this, arguments)

      const callback = arguments[arguments.length - 1]
      if (typeof callback !== 'function') {
        return overrideAPISync(module, name, pathArgumentIndex, true).apply(this, arguments)
      }

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const newPath = archive.copyFileOut(filePath)
      if (!newPath) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      arguments[pathArgumentIndex] = newPath
      return old.apply(this, arguments)
    }

    if (old[util.promisify.custom]) {
      module[name][util.promisify.custom] = makePromiseFunction(
        old[util.promisify.custom],
        pathArgumentIndex
      )
    }

    if (module.promises && module.promises[name]) {
      module.promises[name] = makePromiseFunction(module.promises[name], pathArgumentIndex)
    }
  }

  const makePromiseFunction = function (orig, pathArgumentIndex) {
    return function (...args) {
      const pathArgument = args[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return orig.apply(this, args)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        return Promise.reject(createError(AsarError.INVALID_ARCHIVE, { asarPath }))
      }

      const newPath = archive.copyFileOut(filePath)
      if (!newPath) {
        return Promise.reject(createError(AsarError.NOT_FOUND, { asarPath, filePath }))
      }

      args[pathArgumentIndex] = newPath
      return orig.apply(this, args)
    }
  }

  // Override fs APIs.
  exports.wrapFsWithAsar = (fs) => {
    const logFDs = {}
    const logASARAccess = (asarPath, filePath, offset) => {
      if (!process.env.ELECTRON_LOG_ASAR_READS) return
      if (!logFDs[asarPath]) {
        const path = require('path')
        const logFilename = `${path.basename(asarPath, '.asar')}-access-log.txt`
        const logPath = path.join(require('os').tmpdir(), logFilename)
        logFDs[asarPath] = fs.openSync(logPath, 'a')
      }
      fs.writeSync(logFDs[asarPath], `${offset}: ${filePath}\n`)
    }

    const { lstatSync } = fs
    fs.lstatSync = (pathArgument, options) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return lstatSync(pathArgument, options)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) throw createError(AsarError.INVALID_ARCHIVE, { asarPath })

      const stats = archive.stat(filePath)
      if (!stats) throw createError(AsarError.NOT_FOUND, { asarPath, filePath })

      return asarStatsToFsStats(stats)
    }

    const { lstat } = fs
    fs.lstat = function (pathArgument, options, callback) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (typeof options === 'function') {
        callback = options
        options = {}
      }
      if (!isAsar) return lstat(pathArgument, options, callback)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const stats = archive.stat(filePath)
      if (!stats) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      const fsStats = asarStatsToFsStats(stats)
      nextTick(callback, [null, fsStats])
    }

    fs.promises.lstat = util.promisify(fs.lstat)

    const { statSync } = fs
    fs.statSync = (pathArgument, options) => {
      const { isAsar } = splitPath(pathArgument)
      if (!isAsar) return statSync(pathArgument, options)

      // Do not distinguish links for now.
      return fs.lstatSync(pathArgument, options)
    }

    const { stat } = fs
    fs.stat = (pathArgument, options, callback) => {
      const { isAsar } = splitPath(pathArgument)
      if (typeof options === 'function') {
        callback = options
        options = {}
      }
      if (!isAsar) return stat(pathArgument, options, callback)

      // Do not distinguish links for now.
      process.nextTick(() => fs.lstat(pathArgument, options, callback))
    }

    fs.promises.stat = util.promisify(fs.stat)

    const wrapRealpathSync = function (realpathSync) {
      return function (pathArgument, options) {
        const { isAsar, asarPath, filePath } = splitPath(pathArgument)
        if (!isAsar) return realpathSync.apply(this, arguments)

        const archive = getOrCreateArchive(asarPath)
        if (!archive) {
          throw createError(AsarError.INVALID_ARCHIVE, { asarPath })
        }

        const fileRealPath = archive.realpath(filePath)
        if (fileRealPath === false) {
          throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
        }

        return path.join(realpathSync(asarPath, options), fileRealPath)
      }
    }

    const { realpathSync } = fs
    fs.realpathSync = wrapRealpathSync(realpathSync)
    fs.realpathSync.native = wrapRealpathSync(realpathSync.native)

    const wrapRealpath = function (realpath) {
      return function (pathArgument, options, callback) {
        const { isAsar, asarPath, filePath } = splitPath(pathArgument)
        if (!isAsar) return realpath.apply(this, arguments)

        if (arguments.length < 3) {
          callback = options
          options = {}
        }

        const archive = getOrCreateArchive(asarPath)
        if (!archive) {
          const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
          nextTick(callback, [error])
          return
        }

        const fileRealPath = archive.realpath(filePath)
        if (fileRealPath === false) {
          const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
          nextTick(callback, [error])
          return
        }

        realpath(asarPath, options, (error, archiveRealPath) => {
          if (error === null) {
            const fullPath = path.join(archiveRealPath, fileRealPath)
            callback(null, fullPath)
          } else {
            callback(error)
          }
        })
      }
    }

    const { realpath } = fs
    fs.realpath = wrapRealpath(realpath)
    fs.realpath.native = wrapRealpath(realpath.native)

    fs.promises.realpath = util.promisify(fs.realpath.native)

    const { exists } = fs
    fs.exists = (pathArgument, callback) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return exists(pathArgument, callback)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const pathExists = archive.stat(filePath) !== false
      nextTick(callback, [pathExists])
    }

    fs.exists[util.promisify.custom] = (pathArgument) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return exists[util.promisify.custom](pathArgument)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        return Promise.reject(error)
      }

      return Promise.resolve(archive.stat(filePath) !== false)
    }

    const { existsSync } = fs
    fs.existsSync = (pathArgument) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return existsSync(pathArgument)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) return false

      return archive.stat(filePath) !== false
    }

    const { access } = fs
    fs.access = function (pathArgument, mode, callback) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return access.apply(this, arguments)

      if (typeof mode === 'function') {
        callback = mode
        mode = fs.constants.F_OK
      }

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const info = archive.getFileInfo(filePath)
      if (!info) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      if (info.unpacked) {
        const realPath = archive.copyFileOut(filePath)
        return fs.access(realPath, mode, callback)
      }

      const stats = archive.stat(filePath)
      if (!stats) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      if (mode & fs.constants.W_OK) {
        const error = createError(AsarError.NO_ACCESS, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      nextTick(callback)
    }

    fs.promises.access = util.promisify(fs.access)

    const { accessSync } = fs
    fs.accessSync = function (pathArgument, mode) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return accessSync.apply(this, arguments)

      if (mode == null) mode = fs.constants.F_OK

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        throw createError(AsarError.INVALID_ARCHIVE, { asarPath })
      }

      const info = archive.getFileInfo(filePath)
      if (!info) {
        throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
      }

      if (info.unpacked) {
        const realPath = archive.copyFileOut(filePath)
        return fs.accessSync(realPath, mode)
      }

      const stats = archive.stat(filePath)
      if (!stats) {
        throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
      }

      if (mode & fs.constants.W_OK) {
        throw createError(AsarError.NO_ACCESS, { asarPath, filePath })
      }
    }

    // Decrypt the body of a file flagged `encrypted` in the header, using the
    // per-archive AES-128-ECB key recovered by getOrReadArchiveHeader().
    function decryption(data, asarPath) {
      let key = getOrReadArchiveHeader(asarPath, fs)
      // console.warn('#### begin decrypt, key: ', key);
      if (!key) {
        return data
      }
      if (typeof data === 'string') {
        data = Buffer.from(data)
      }
      var cipherChunks = []
      var decipher = crypto.createDecipheriv('aes-128-ecb', key, Buffer.from([]))
      // No padding is stripped, so the ciphertext length must be a multiple of 16.
      decipher.setAutoPadding(false)
      cipherChunks.push(decipher.update(data))
      cipherChunks.push(decipher.final())
      return Buffer.concat(cipherChunks)
    }
```
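Encrypted file bodies go through the same AES-128-ECB routine, using the per-archive key taken from the header. Because `setAutoPadding(false)` is used, the sketch below shows three consequences:

- The output is exactly as long as the input, so any padding the packer added stays in the returned data.
- A body whose length is not a multiple of 16 makes `decipher.final()` throw.
- ECB has no IV, so identical 16-byte plaintext blocks encrypt to identical ciphertext blocks. This is one reason the scheme counts as "fairly simple".

`decryptBody`, `encryptBody` and the sample key are hypothetical stand-ins, not the app's code.

```javascript
const crypto = require('crypto')

// Hypothetical stand-in for decryption(): AES-128-ECB, no IV, no padding removal.
function decryptBody(key, data) {
  const d = crypto.createDecipheriv('aes-128-ecb', key, null)
  d.setAutoPadding(false)
  return Buffer.concat([d.update(data), d.final()])
}

function encryptBody(key, data) {
  const c = crypto.createCipheriv('aes-128-ecb', key, null)
  c.setAutoPadding(false)
  return Buffer.concat([c.update(data), c.final()])
}

const key = '0123456789abcdef' // string keys are accepted, as in the loader
// Three 16-byte blocks; block 0 and block 2 are identical on purpose.
const source = Buffer.from("console.log('a')console.log('b')console.log('a')")
const body = encryptBody(key, source)

// ECB leaks structure: equal plaintext blocks -> equal ciphertext blocks.
const sameBlocks = body.subarray(0, 16).equals(body.subarray(32, 48))
const roundTrip = decryptBody(key, body).toString()

let misaligned = null
try {
  decryptBody(key, body.subarray(0, 20)) // 20 is not a multiple of 16
} catch (err) {
  misaligned = err.message
}

console.log(sameBlocks, roundTrip === source.toString(), misaligned !== null) // true true true
```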

```javascript
    const { readFile } = fs
    fs.readFile = function (pathArgument, options, callback) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return readFile.apply(this, arguments)

      if (typeof options === 'function') {
        callback = options
        options = { encoding: null }
      } else if (typeof options === 'string') {
        options = { encoding: options }
      } else if (options === null || options === undefined) {
        options = { encoding: null }
      } else if (typeof options !== 'object') {
        throw new TypeError('Bad arguments')
      }

      const { encoding } = options
      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const info = archive.getFileInfo(filePath)
      if (!info) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      if (info.size === 0) {
        nextTick(callback, [null, encoding ? '' : Buffer.alloc(0)])
        return
      }

      if (info.unpacked) {
        const realPath = archive.copyFileOut(filePath)
        return fs.readFile(realPath, options, callback)
      }

      const buffer = Buffer.alloc(info.size)
      const fd = archive.getFd()
      if (!(fd >= 0)) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      logASARAccess(asarPath, filePath, info.offset)
      fs.read(fd, buffer, 0, info.size, info.offset, (error) => {
        // #####################
        // buffer
        // console.warn('### asar.js: fs.read, path: ', filePath, ' size:', info.size, ' offset: ', info.offset);
        if (error) return callback(error)
        let result = buffer
        if (info.encrypted) {
          // Report decryption failures through the callback instead of throwing from an I/O callback.
          try {
            result = decryption(result, asarPath)
          } catch (err) {
            return callback(err)
          }
          // console.warn('### asar.js: fs.read', result.toString().slice(0, 10));
        }
        // #####################
        callback(null, encoding ? result.toString(encoding) : result)
      })
    }

    fs.promises.readFile = util.promisify(fs.readFile)

    const { readFileSync } = fs
    fs.readFileSync = function (pathArgument, options) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return readFileSync.apply(this, arguments)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) throw createError(AsarError.INVALID_ARCHIVE, { asarPath })

      const info = archive.getFileInfo(filePath)
      if (!info) throw createError(AsarError.NOT_FOUND, { asarPath, filePath })

      if (info.size === 0) return options ? '' : Buffer.alloc(0)

      if (info.unpacked) {
        const realPath = archive.copyFileOut(filePath)
        return fs.readFileSync(realPath, options)
      }

      if (!options) {
        options = { encoding: null }
      } else if (typeof options === 'string') {
        options = { encoding: options }
      } else if (typeof options !== 'object') {
        throw new TypeError('Bad arguments')
      }

      const { encoding } = options
      const buffer = Buffer.alloc(info.size)
      const fd = archive.getFd()
      if (!(fd >= 0)) throw createError(AsarError.NOT_FOUND, { asarPath, filePath })

      logASARAccess(asarPath, filePath, info.offset)
      fs.readSync(fd, buffer, 0, info.size, info.offset)
      // #####################
      // buffer
      // console.warn('### asar.js: fs.readSync, path: ', filePath, ' size:', info.size, ' offset: ', info.offset);
      let result = buffer
      if (info.encrypted) {
        result = decryption(buffer, asarPath)
        // console.warn('### asar.js: fs.readSync result', result.toString().slice(0, 10));
      }
      // #####################
      return encoding ? result.toString(encoding) : result
    }

    const { readdir } = fs
    fs.readdir = function (pathArgument, options = {}, callback) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (typeof options === 'function') {
        callback = options
        options = {}
      }
      if (!isAsar) return readdir.apply(this, arguments)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }

      const files = archive.readdir(filePath)
      if (!files) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }

      if (options.withFileTypes) {
        const dirents = []
        for (const file of files) {
          // Entries are relative to filePath, so stat the joined path.
          const stats = archive.stat(path.join(filePath, file))
          if (stats.isFile) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_FILE))
          } else if (stats.isDirectory) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_DIR))
          } else if (stats.isLink) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_LINK))
          }
        }
        nextTick(callback, [null, dirents])
        return
      }

      nextTick(callback, [null, files])
    }

    fs.promises.readdir = util.promisify(fs.readdir)

    const { readdirSync } = fs
    fs.readdirSync = function (pathArgument, options = {}) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return readdirSync.apply(this, arguments)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        throw createError(AsarError.INVALID_ARCHIVE, { asarPath })
      }

      const files = archive.readdir(filePath)
      if (!files) {
        throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
      }

      if (options.withFileTypes) {
        const dirents = []
        for (const file of files) {
          // Entries are relative to filePath, so stat the joined path.
          const stats = archive.stat(path.join(filePath, file))
          if (stats.isFile) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_FILE))
          } else if (stats.isDirectory) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_DIR))
          } else if (stats.isLink) {
            dirents.push(new fs.Dirent(file, fs.constants.UV_DIRENT_LINK))
          }
        }
        return dirents
      }

      return files
    }

    // Note: unlike readFile/readFileSync, this path does not decrypt `info.encrypted` entries.
    const { internalModuleReadJSON } = internalBinding('fs')
    internalBinding('fs').internalModuleReadJSON = (pathArgument) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return internalModuleReadJSON(pathArgument)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) return

      const info = archive.getFileInfo(filePath)
      if (!info) return
      if (info.size === 0) return ''
      if (info.unpacked) {
        const realPath = archive.copyFileOut(filePath)
        return fs.readFileSync(realPath, { encoding: 'utf8' })
      }

      const buffer = Buffer.alloc(info.size)
      const fd = archive.getFd()
      if (!(fd >= 0)) return

      logASARAccess(asarPath, filePath, info.offset)
      fs.readSync(fd, buffer, 0, info.size, info.offset)
      return buffer.toString('utf8')
    }

    const { internalModuleStat } = internalBinding('fs')
    internalBinding('fs').internalModuleStat = (pathArgument) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return internalModuleStat(pathArgument)

      const archive = getOrCreateArchive(asarPath)
      if (!archive) return -34 // -ENOENT

      const stats = archive.stat(filePath)
      if (!stats) return -34 // -ENOENT

      return stats.isDirectory ? 1 : 0
    }

    // Calling mkdir for directory inside asar archive should throw ENOTDIR
    // error, but on Windows it throws ENOENT.
    if (process.platform === 'win32') {
      const { mkdir } = fs
      fs.mkdir = (pathArgument, options, callback) => {
        if (typeof options === 'function') {
          callback = options
          options = {}
        }

        const { isAsar, filePath } = splitPath(pathArgument)
        if (isAsar && filePath.length > 0) {
          const error = createError(AsarError.NOT_DIR)
          nextTick(callback, [error])
          return
        }

        mkdir(pathArgument, options, callback)
      }

      fs.promises.mkdir = util.promisify(fs.mkdir)

      const { mkdirSync } = fs
      fs.mkdirSync = function (pathArgument, options) {
        const { isAsar, filePath } = splitPath(pathArgument)
        if (isAsar && filePath.length) throw createError(AsarError.NOT_DIR)
        return mkdirSync(pathArgument, options)
      }
    }

    function invokeWithNoAsar(func) {
      return function () {
        const processNoAsarOriginalValue = process.noAsar
        process.noAsar = true
        try {
          return func.apply(this, arguments)
        } finally {
          process.noAsar = processNoAsarOriginalValue
        }
      }
    }

    // Strictly implementing the flags of fs.copyFile is hard, just do a simple
    // implementation for now. Doing 2 copies won't spend much time more as OS
    // has filesystem caching.
    overrideAPI(fs, 'copyFile')
    overrideAPISync(fs, 'copyFileSync')

    overrideAPI(fs, 'open')
    overrideAPISync(process, 'dlopen', 1)
    overrideAPISync(Module._extensions, '.node', 1)
    overrideAPISync(fs, 'openSync')

    const overrideChildProcess = (childProcess) => {
      // Executing a command string containing a path to an asar archive
      // confuses `childProcess.execFile`, which is internally called by
      // `childProcess.{exec,execSync}`, causing Electron to consider the full
      // command as a single path to an archive.
      const { exec, execSync } = childProcess
      childProcess.exec = invokeWithNoAsar(exec)
      childProcess.exec[util.promisify.custom] = invokeWithNoAsar(exec[util.promisify.custom])
      childProcess.execSync = invokeWithNoAsar(execSync)

      overrideAPI(childProcess, 'execFile')
      overrideAPISync(childProcess, 'execFileSync')
    }

    // Lazily override the child_process APIs only when child_process is
    // fetched the first time. We will eagerly override the child_process APIs
    // when this env var is set so that stack traces generated inside node unit
    // tests will match. This env var will only slow things down in users apps
    // and should not be used.
    if (process.env.ELECTRON_EAGER_ASAR_HOOK_FOR_TESTING) {
      overrideChildProcess(require('child_process'))
    } else {
      const originalModuleLoad = Module._load
      Module._load = (request, ...args) => {
        const loadResult = originalModuleLoad(request, ...args)
        if (request === 'child_process') {
          if (!v8Util.getHiddenValue(loadResult, 'asar-ready')) {
            v8Util.setHiddenValue(loadResult, 'asar-ready', true)
            // Just to make it obvious what we are dealing with here
            const childProcess = loadResult
            overrideChildProcess(childProcess)
          }
        }
        return loadResult
      }
    }
  }
})()
```

'use strict';(function () {

  const asar = process._linkedBinding('atom_common_asar')
  const v8Util = process._linkedBinding('atom_common_v8_util')
  const { Buffer } = require('buffer')
  const Module = require('module')
  const path = require('path')
  const util = require('util')
  //###################################
  const crypto = require('crypto')
  //###################################
  const Promise = global.Promise
  const envNoAsar =
    process.env.ELECTRON_NO_ASAR &&
    process.type !== 'browser' &&
    process.type !== 'renderer'
  const isAsarDisabled = () => process.noAsar || envNoAsar
  const internalBinding = process.internalBinding
  delete process.internalBinding

  /**
   * @param {!Function} functionToCall
   * @param {!Array|undefined} args
   */
  const nextTick = (functionToCall, args = []) => {
    process.nextTick(() => functionToCall(...args))
  }

  // Cache asar archive objects.
  const cachedArchives = new Map()
  // archivePath -> AES key recovered from the encrypted header.
  const cachedArchiveKeys = {}

  const isPrime = (n) => {
    if (n < 2) return false
    if (n == 2) return true
    if (n % 2 == 0) return false
    let foo = n / 2
    for (let i = 3; i <= foo; i += 2) if (n % i == 0) return false
    return true
  }

  // Decrypt header information: `data` is the RSA-encrypted block read from the archive.
  const decryptHeader = (data) => {
    let pubKey = ''
    pubKey +=
      [
        '-', '-', '-', '-', '-',
        'B', 'E', 'G', 'I', 'N', ' ', 'P', 'U', 'B', 'L', 'I', 'C', ' ', 'K', 'E', 'Y',
        '-', '-', '-', '-', '-',
      ].join('') + '\r\n'
    // AES-128 key: the first 16 primes >= 31 (31, 37, ..., 101), one byte each.
    let num = 31
    let keylist = []
    for (let i = 0; i < 16; num++) {
      if (isPrime(num)) {
        keylist.push(num)
        i++
      }
    }
    let key = Buffer.from(keylist)
    // 384 bytes = 6 x 64-byte chunks; each decrypts to one 64-character
    // base64 line of the PEM body.
    let list = [
      110, 170, 13, 242, 151, 96, 97, 176, 68, 151, 34, 31, 98, 40, 95, 251,
      176, 35, 121, 231, 91, 99, 152, 196, 95, 67, 111, 216, 41, 35, 219, 26,
      237, 60, 239, 178, 61, 243, 248, 190, 166, 169, 22, 171, 45, 3, 96, 7, 73,
      211, 152, 91, 2, 213, 65, 161, 84, 233, 248, 94, 248, 184, 178, 202, 18,
      234, 38, 140, 32, 100, 226, 56, 201, 152, 103, 187, 223, 213, 9, 196, 222,
      254, 108, 77, 50, 235, 19, 219, 188, 246, 28, 231, 175, 179, 151, 159,
      123, 13, 243, 206, 171, 84, 170, 125, 138, 88, 246, 156, 131, 246, 197,
      236, 78, 163, 89, 104, 17, 28, 106, 18, 64, 193, 33, 227, 90, 177, 110,
      19, 27, 37, 241, 170, 234, 1, 158, 5, 182, 136, 86, 179, 8, 106, 194, 120,
      33, 46, 152, 172, 76, 12, 137, 78, 149, 234, 90, 175, 163, 116, 151, 79,
      246, 115, 39, 123, 207, 185, 51, 172, 103, 128, 67, 125, 127, 166, 177,
      150, 33, 144, 147, 27, 78, 53, 0, 103, 140, 102, 73, 207, 72, 121, 237,
      117, 52, 5, 254, 217, 131, 77, 178, 67, 141, 37, 98, 141, 83, 236, 169, 7,
      208, 214, 218, 222, 227, 197, 227, 193, 117, 65, 242, 156, 172, 21, 191,
      29, 236, 218, 75, 43, 23, 56, 54, 40, 25, 0, 184, 183, 213, 173, 97, 115,
      242, 72, 18, 53, 252, 8, 113, 100, 17, 142, 20, 214, 176, 162, 249, 182,
      232, 154, 32, 224, 62, 217, 44, 170, 179, 127, 89, 114, 179, 106, 120, 79,
      22, 65, 67, 198, 164, 3, 111, 206, 172, 150, 213, 150, 254, 135, 140, 168,
      115, 235, 187, 133, 209, 130, 198, 73, 148, 118, 68, 81, 190, 166, 102,
      178, 231, 177, 63, 177, 44, 35, 164, 182, 226, 222, 199, 42, 15, 153, 164,
      190, 90, 19, 18, 3, 241, 12, 57, 121, 33, 82, 253, 178, 184, 197, 125,
      212, 232, 188, 114, 139, 136, 25, 71, 196, 129, 148, 163, 56, 184, 133,
      30, 83, 99, 58, 160, 62, 81, 50, 175, 199, 203, 243, 99, 7, 218, 156, 219,
      228, 33, 69, 45, 133, 124, 160, 228, 89, 252, 68, 91, 178, 4, 28, 208, 15,
    ]
    for (let i = 0; i < 6; i++) {
      var cipherChunks = []
      var decipher = crypto.createDecipheriv(
        'aes-128-ecb',
        key,
        Buffer.from([])
      )
      decipher.setAutoPadding(false)
      cipherChunks.push(
        decipher.update(Buffer.from(list.slice(i * 64, (i + 1) * 64)))
      )
      cipherChunks.push(decipher.final())
      let res = Buffer.concat(cipherChunks)
      pubKey += res.toString() + '\r\n'
    }
    // The last 8 base64 characters of the key are stored in clear text.
    pubKey += ['q', 'Q', 'I', 'D', 'A', 'Q', 'A', 'B'].join('') + '\r\n'
    pubKey +=
      [
        '-', '-', '-', '-', '-',
        'E', 'N', 'D', ' ', 'P', 'U', 'B', 'L', 'I', 'C', ' ', 'K', 'E', 'Y',
        '-', '-', '-', '-', '-',
      ].join('') + '\r\n'
    // publicDecrypt() reverses privateEncrypt(): the block was encrypted with
    // the matching private key.
    let dec_by_pub = crypto.publicDecrypt(pubKey, data)
    return dec_by_pub
  }

  const getOrCreateArchive = (archivePath) => {
    const isCached = cachedArchives.has(archivePath)
    if (isCached) {
      return cachedArchives.get(archivePath)
    }
    const newArchive = asar.createArchive(archivePath)
    if (!newArchive) return null
    cachedArchives.set(archivePath, newArchive)
    return newArchive
  }

  const CAPACITY_READ_ONLY = 9007199254740992 // 2^53, i.e. Number.MAX_SAFE_INTEGER + 1.

  // sizeof(T).
  const SIZE_UINT32 = 4
  const SIZE_INT32 = 4

  // Aligns 'i' by rounding it up to the next multiple of 'alignment'.
  const alignInt = function (i, alignment) {
    return i + ((alignment - (i % alignment)) % alignment)
  }

  const PickleIterator = (function () {
    function PickleIterator(pickle) {
      this.payload = pickle.header
      this.payloadOffset = pickle.headerSize
      this.readIndex = 0
      this.endIndex = pickle.getPayloadSize()
    }

    PickleIterator.prototype.readUInt32 = function () {
      return this.readBytes(SIZE_UINT32, Buffer.prototype.readUInt32LE)
    }

    PickleIterator.prototype.readInt = function () {
      return this.readBytes(SIZE_INT32, Buffer.prototype.readInt32LE)
    }

    PickleIterator.prototype.readString = function () {
      return this.readBytes(this.readInt()).toString()
    }

    PickleIterator.prototype.readBytes = function (length, method) {
      var readPayloadOffset = this.getReadPayloadOffsetAndAdvance(length)
      if (method != null) {
        return method.call(this.payload, readPayloadOffset, length)
      } else {
        return this.payload.slice(readPayloadOffset, readPayloadOffset + length)
      }
    }

    PickleIterator.prototype.getReadPayloadOffsetAndAdvance = function (length) {
      if (length > this.endIndex - this.readIndex) {
        this.readIndex = this.endIndex
        throw new Error('Failed to read data with length of ' + length)
      }
      var readPayloadOffset = this.payloadOffset + this.readIndex
      this.advance(length)
      return readPayloadOffset
    }

    PickleIterator.prototype.advance = function (size) {
      var alignedSize = alignInt(size, SIZE_UINT32)
      if (this.endIndex - this.readIndex < alignedSize) {
        this.readIndex = this.endIndex
      } else {
        this.readIndex += alignedSize
      }
    }

    return PickleIterator
  })()

  // Minimal reimplementation of chromium-pickle-js, just enough to read the asar header.
  const tinyPickle = (function () {
    function tinyPickle(buffer) {
      this.header = buffer
      this.headerSize = buffer.length - this.getPayloadSize()
      this.capacityAfterHeader = CAPACITY_READ_ONLY
      this.writeOffset = 0
      if (this.headerSize > buffer.length) {
        this.headerSize = 0
      }
      if (this.headerSize !== alignInt(this.headerSize, SIZE_UINT32)) {
        this.headerSize = 0
      }
      if (this.headerSize === 0) {
        this.header = Buffer.alloc(0)
      }
    }

    tinyPickle.prototype.getPayloadSize = function () {
      return this.header.readUInt32LE(0)
    }

    tinyPickle.prototype.createIterator = function () {
      return new PickleIterator(this)
    }

    return tinyPickle
  })()

  const getOrReadArchiveHeader = (archivePath, fs) => {
    if (cachedArchiveKeys[archivePath] !== undefined) {
      return cachedArchiveKeys[archivePath]
    }
    // if (!archivePath.includes('default_app.asar')) {
    const fd = cachedArchives.get(archivePath).getFd()
    let buffer = Buffer.alloc(8) // for default header size
    // params: fd, buffer, offset in buffer, length, position in file
    fs.readSync(fd, buffer, 0, 8, 0)
    const sizePickle = new tinyPickle(buffer)
    let nRsaCipherLength = sizePickle.createIterator().readUInt32()
    // console.log('### nRsaCipherLength:', nRsaCipherLength);
    buffer = Buffer.alloc(nRsaCipherLength)
    fs.readSync(fd, buffer, 0, nRsaCipherLength, 8)
    // UINT32|string|string
    // const rsaHeaderPickle = new tinyPickle(buffer);
    // const rsa_encrypted_str = rsaHeaderPickle.createIterator().readString();
    // let headerStr = Buffer.toString(); // read header str
    let rsaPlaintext = decryptHeader(buffer)
    // console.log('### rsaPlaintext length:', rsaPlaintext.length);
    let headerPicklePickle = new tinyPickle(rsaPlaintext).createIterator()
    let nAesCipherLength = headerPicklePickle.readUInt32()
    let headermd5 = headerPicklePickle.readString()
    // AES key used to decrypt the JSON header
    let aeskey = headerPicklePickle.readString()
    // console.log('### rsaPlaintext, nAesCipherLength: ', nAesCipherLength, ' md5:', headermd5, ' aeskey:', aeskey);
    let data = Buffer.alloc(nAesCipherLength)
    fs.readSync(fd, data, 0, nAesCipherLength, 8 + nRsaCipherLength)
    var cipherChunks = []
    var decipher = crypto.createDecipheriv(
      'aes-128-ecb',
      aeskey,
      Buffer.from([])
    )
    decipher.setAutoPadding(false)
    cipherChunks.push(decipher.update(data, undefined, 'utf8'))
    cipherChunks.push(decipher.final('utf8'))
    let header = JSON.parse(cipherChunks.join(''))
    // verify header md5 (computed over the AES ciphertext `data`, not over filesStr)
    let filesStr = JSON.stringify(header.files)
    // console.log('### aes decrypted: ', filesStr);
    const hash = crypto.createHash('md5')
    hash.update(data)
    let filesMd5 = hash.digest('hex')
    if (headermd5 !== filesMd5) {
      // console.warn('### headermd5: ', headermd5, ' filesmd5: ', filesMd5);
      throw createError(AsarError.INVALID_ARCHIVE, { asarPath: archivePath })
    }
    cachedArchiveKeys[archivePath] = aeskey
    return aeskey
    // }
    // return '';
  }

  // Separate asar package's path from full path.
  const splitPath = (archivePathOrBuffer) => {
    // Shortcut for disabled asar.
    if (isAsarDisabled()) return { isAsar: false }
    // Check for a bad argument type.
    let archivePath = archivePathOrBuffer
    if (Buffer.isBuffer(archivePathOrBuffer)) {
      archivePath = archivePathOrBuffer.toString()
    }
    if (typeof archivePath !== 'string') return { isAsar: false }
    return asar.splitPath(path.normalize(archivePath))
  }

  // Convert asar archive's Stats object to fs's Stats object.
  let nextInode = 0
  const uid = process.getuid != null ? process.getuid() : 0
  const gid = process.getgid != null ? process.getgid() : 0
  const fakeTime = new Date()
  const msec = (date) => (date || fakeTime).getTime()

  const asarStatsToFsStats = function (stats) {
    const { Stats, constants } = require('fs')
    let mode =
      constants.S_IROTH ^
      constants.S_IRGRP ^
      constants.S_IRUSR ^
      constants.S_IWUSR
    if (stats.isFile) {
      mode ^= constants.S_IFREG
    } else if (stats.isDirectory) {
      mode ^= constants.S_IFDIR
    } else if (stats.isLink) {
      mode ^= constants.S_IFLNK
    }
    return new Stats(
      1, // dev
      mode, // mode
      1, // nlink
      uid,
      gid,
      0, // rdev
      undefined, // blksize
      ++nextInode, // ino
      stats.size,
      undefined, // blocks,
      msec(stats.atime), // atim_msec
      msec(stats.mtime), // mtim_msec
      msec(stats.ctime), // ctim_msec
      msec(stats.birthtime) // birthtim_msec
    )
  }

  const AsarError = {
    NOT_FOUND: 'NOT_FOUND',
    NOT_DIR: 'NOT_DIR',
    NO_ACCESS: 'NO_ACCESS',
    INVALID_ARCHIVE: 'INVALID_ARCHIVE',
  }

  const createError = (errorType, { asarPath, filePath } = {}) => {
    let error
    switch (errorType) {
      case AsarError.NOT_FOUND:
        error = new Error(`ENOENT, ${filePath} not found in ${asarPath}`)
        error.code = 'ENOENT'
        error.errno = -2
        break
      case AsarError.NOT_DIR:
        error = new Error('ENOTDIR, not a directory')
        error.code = 'ENOTDIR'
        error.errno = -20
        break
      case AsarError.NO_ACCESS:
        error = new Error(`EACCES: permission denied, access '${filePath}'`)
        error.code = 'EACCES'
        error.errno = -13
        break
      case AsarError.INVALID_ARCHIVE:
        error = new Error(`Invalid package ${asarPath}`)
        break
      default:
        throw new Error(
          `Invalid error type "${errorType}" passed to createError.`
        )
    }
    return error
  }

  const overrideAPISync = function (module, name, pathArgumentIndex, fromAsync) {
    if (pathArgumentIndex == null) pathArgumentIndex = 0
    const old = module[name]
    const func = function () {
      const pathArgument = arguments[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return old.apply(this, arguments)
      const archive = getOrCreateArchive(asarPath)
      if (!archive) throw createError(AsarError.INVALID_ARCHIVE, { asarPath })
      const newPath = archive.copyFileOut(filePath)
      if (!newPath)
        throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
      arguments[pathArgumentIndex] = newPath
      return old.apply(this, arguments)
    }
    if (fromAsync) {
      return func
    }
    module[name] = func
  }

  const overrideAPI = function (module, name, pathArgumentIndex) {
    if (pathArgumentIndex == null) pathArgumentIndex = 0
    const old = module[name]
    module[name] = function () {
      const pathArgument = arguments[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return old.apply(this, arguments)
      const callback = arguments[arguments.length - 1]
      if (typeof callback !== 'function') {
        return overrideAPISync(module, name, pathArgumentIndex, true).apply(
          this,
          arguments
        )
      }
      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }
      const newPath = archive.copyFileOut(filePath)
      if (!newPath) {
        const error = createError(AsarError.NOT_FOUND, { asarPath, filePath })
        nextTick(callback, [error])
        return
      }
      arguments[pathArgumentIndex] = newPath
      return old.apply(this, arguments)
    }
    if (old[util.promisify.custom]) {
      module[name][util.promisify.custom] = makePromiseFunction(
        old[util.promisify.custom],
        pathArgumentIndex
      )
    }
    if (module.promises && module.promises[name]) {
      module.promises[name] = makePromiseFunction(
        module.promises[name],
        pathArgumentIndex
      )
    }
  }

  const makePromiseFunction = function (orig, pathArgumentIndex) {
    return function (...args) {
      const pathArgument = args[pathArgumentIndex]
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return orig.apply(this, args)
      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        return Promise.reject(
          createError(AsarError.INVALID_ARCHIVE, { asarPath })
        )
      }
      const newPath = archive.copyFileOut(filePath)
      if (!newPath) {
        return Promise.reject(
          createError(AsarError.NOT_FOUND, { asarPath, filePath })
        )
      }
      args[pathArgumentIndex] = newPath
      return orig.apply(this, args)
    }
  }

  // Override fs APIs.
  exports.wrapFsWithAsar = (fs) => {
    const logFDs = {}
    const logASARAccess = (asarPath, filePath, offset) => {
      if (!process.env.ELECTRON_LOG_ASAR_READS) return
      if (!logFDs[asarPath]) {
        const path = require('path')
        const logFilename = `${path.basename(asarPath, '.asar')}-access-log.txt`
        const logPath = path.join(require('os').tmpdir(), logFilename)
        logFDs[asarPath] = fs.openSync(logPath, 'a')
      }
      fs.writeSync(logFDs[asarPath], `${offset}: ${filePath}\n`)
    }

    const { lstatSync } = fs
    fs.lstatSync = (pathArgument, options) => {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (!isAsar) return lstatSync(pathArgument, options)
      const archive = getOrCreateArchive(asarPath)
      if (!archive) throw createError(AsarError.INVALID_ARCHIVE, { asarPath })
      const stats = archive.stat(filePath)
      if (!stats) throw createError(AsarError.NOT_FOUND, { asarPath, filePath })
      return asarStatsToFsStats(stats)
    }

    const { lstat } = fs
    fs.lstat = function (pathArgument, options, callback) {
      const { isAsar, asarPath, filePath } = splitPath(pathArgument)
      if (typeof options === 'function') {
        callback = options
        options = {}
      }
      if (!isAsar) return lstat(pathArgument, options, callback)
      const archive = getOrCreateArchive(asarPath)
      if (!archive) {
        const error = createError(AsarError.INVALID_ARCHIVE, { asarPath })
        nextTick(callback, [error])
        return
      }
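The key handling in `decryptHeader()` above can be reproduced outside Electron. The following Node.js sketch re-derives the 16-byte AES key (the first 16 primes starting at 31) and rebuilds a PEM public key the same way the loader does: each 64-byte slice is decrypted independently with AES-128-ECB and padding disabled. The names `deriveHeaderKey` and `rebuildPublicKeyPem` are hypothetical, and the default `tail` argument simply copies the `qQIDAQAB` suffix hard-coded in the sample. Treat it as a sketch for inspecting the scheme, not as the vendor's own tooling.

```javascript
const crypto = require('crypto')

// Same rule as decryptHeader(): walk upward from 31 and keep the first 16
// primes. All of them are below 256, so each one fits in a single key byte.
function isPrime(n) {
  if (n < 2) return false
  if (n % 2 === 0) return n === 2
  for (let i = 3; i * i <= n; i += 2) {
    if (n % i === 0) return false
  }
  return true
}

function deriveHeaderKey() {
  const primes = []
  for (let n = 31; primes.length < 16; n++) {
    if (isPrime(n)) primes.push(n)
  }
  return Buffer.from(primes) // 16 bytes = an AES-128 key
}

// Each 64-byte slice is decrypted on its own with AES-128-ECB (padding off)
// into one 64-character base64 line; the clear-text tail and footer follow.
function rebuildPublicKeyPem(cipherBytes, key, tail = 'qQIDAQAB') {
  const lines = ['-----BEGIN PUBLIC KEY-----']
  for (let off = 0; off < cipherBytes.length; off += 64) {
    const decipher = crypto.createDecipheriv('aes-128-ecb', key, null)
    decipher.setAutoPadding(false)
    const chunk = Buffer.from(cipherBytes.slice(off, off + 64))
    lines.push(Buffer.concat([decipher.update(chunk), decipher.final()]).toString())
  }
  lines.push(tail, '-----END PUBLIC KEY-----')
  return lines.join('\r\n') + '\r\n'
}

console.log(deriveHeaderKey().toString('hex'))
```

Running it prints `1f25292b2f353b3d4347494f53596165`, i.e. the bytes 31, 37, …, 101. Passing the 384-entry `list` array from `decryptHeader()` as `cipherBytes` should yield the same PEM text the loader hands to `crypto.publicDecrypt()`. Six 64-character lines plus the 8-character tail give 392 base64 characters, which is the length of a 2048-bit RSA public key in SPKI form.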
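Reading `getOrReadArchiveHeader()` together with `tinyPickle`, the archive appears to start with an 8-byte pickle holding the RSA ciphertext length, followed by the RSA block and then the AES-encrypted JSON header. The RSA plaintext is itself a pickle: a `uint32` AES ciphertext length, an md5 string, and the AES key string. The sketch below packs and unpacks that layout in memory so the format can be checked without the native `asar` binding. All helper names (`writePickle`, `pickleReader`, `packHeader`, `unpackHeader`) are hypothetical, the RSA key pair is one you generate yourself, and space-padding the JSON to a 16-byte multiple is an assumption inferred from `setAutoPadding(false)` combined with `JSON.parse()`.

```javascript
const crypto = require('crypto')

// Minimal Chromium-style pickle helpers (hypothetical names). A pickle is
// [uint32 payloadSize][payload]; a string is an int32 byte length followed by
// the bytes, padded to a 4-byte boundary like alignInt() in the loader.
const align4 = (n) => n + ((4 - (n % 4)) % 4)

function writePickle(fields) {
  const parts = fields.map((f) => {
    if (typeof f === 'number') {
      const b = Buffer.alloc(4)
      b.writeUInt32LE(f, 0)
      return b
    }
    const s = Buffer.from(f, 'utf8')
    const b = Buffer.alloc(4 + align4(s.length))
    b.writeInt32LE(s.length, 0)
    s.copy(b, 4)
    return b
  })
  const payload = Buffer.concat(parts)
  const size = Buffer.alloc(4)
  size.writeUInt32LE(payload.length, 0)
  return Buffer.concat([size, payload])
}

function pickleReader(buf) {
  let pos = buf.length - buf.readUInt32LE(0) // header size, as in tinyPickle
  return {
    uint32() {
      const v = buf.readUInt32LE(pos)
      pos += 4
      return v
    },
    string() {
      const len = buf.readInt32LE(pos)
      const s = buf.toString('utf8', pos + 4, pos + 4 + len)
      pos += 4 + align4(len)
      return s
    },
  }
}

// Inverse of the loader (assumption: JSON is space-padded to 16 bytes).
function packHeader(headerObj, aesKey, privateKeyPem) {
  let json = JSON.stringify(headerObj)
  json += ' '.repeat((16 - (Buffer.byteLength(json) % 16)) % 16)
  const cipher = crypto.createCipheriv('aes-128-ecb', aesKey, null)
  cipher.setAutoPadding(false)
  const aesCipher = Buffer.concat([cipher.update(json, 'utf8'), cipher.final()])
  // The md5 covers the AES ciphertext, matching hash.update(data) in the loader.
  const md5 = crypto.createHash('md5').update(aesCipher).digest('hex')
  const rsaPlain = writePickle([aesCipher.length, md5, aesKey])
  const rsaCipher = crypto.privateEncrypt(privateKeyPem, rsaPlain)
  return Buffer.concat([writePickle([rsaCipher.length]), rsaCipher, aesCipher])
}

// Mirrors getOrReadArchiveHeader(), reading from a Buffer instead of an fd.
function unpackHeader(buf, publicKeyPem) {
  const rsaLen = pickleReader(buf.subarray(0, 8)).uint32()
  const rsaPlain = crypto.publicDecrypt(publicKeyPem, buf.subarray(8, 8 + rsaLen))
  const r = pickleReader(rsaPlain)
  const aesLen = r.uint32()
  const md5 = r.string()
  const aesKey = r.string()
  const aesCipher = buf.subarray(8 + rsaLen, 8 + rsaLen + aesLen)
  if (crypto.createHash('md5').update(aesCipher).digest('hex') !== md5) {
    throw new Error('Invalid package: header md5 mismatch')
  }
  const decipher = crypto.createDecipheriv('aes-128-ecb', aesKey, null)
  decipher.setAutoPadding(false)
  const json = Buffer.concat([decipher.update(aesCipher), decipher.final()]).toString('utf8')
  return { aesKey, header: JSON.parse(json) }
}
```

With your own key pair this round-trips. A blob produced this way would only be accepted by the stock loader if it were signed with the vendor's private key or if the public key embedded in `decryptHeader()` were replaced as well.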
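`asarStatsToFsStats()` builds the `mode` field with XOR rather than OR. Because the permission bits and the file-type bits never overlap, the result is the same. The worked example below uses literal POSIX octal values (an assumption that matches Linux and macOS) instead of `fs.constants`, so the arithmetic is visible; `asarMode` is a hypothetical name.

```javascript
// Literal POSIX octal values (Linux/macOS), written out for clarity.
const S_IFREG = 0o100000
const S_IFDIR = 0o040000
const S_IFLNK = 0o120000
const S_IRUSR = 0o400
const S_IWUSR = 0o200
const S_IRGRP = 0o040
const S_IROTH = 0o004

// Same construction as asarStatsToFsStats(): the bits are disjoint, so
// XOR (^) gives the same value as OR (|).
function asarMode({ isFile = false, isDirectory = false, isLink = false }) {
  let mode = S_IROTH ^ S_IRGRP ^ S_IRUSR ^ S_IWUSR // 0o644, rw-r--r--
  if (isFile) mode ^= S_IFREG
  else if (isDirectory) mode ^= S_IFDIR
  else if (isLink) mode ^= S_IFLNK
  return mode
}

console.log(asarMode({ isFile: true }).toString(8)) // 100644
console.log(asarMode({ isDirectory: true }).toString(8)) // 40644
```

Note that directories inside the archive are reported as `0o644` plus the directory bit, i.e. without any execute bits.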
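`overrideAPISync()`, `overrideAPI()` and `makePromiseFunction()` share one pattern: inspect the path argument and, if it points inside an archive, extract the entry with `archive.copyFileOut()` and call the original function with the extracted path instead. The loader applies this to `process.dlopen`, `Module._extensions['.node']` and `fs.open`/`fs.openSync`, presumably because those need a real file on disk. The sketch below isolates the synchronous variant on a fake module object. `wrapPathArgument` and its `resolve` callback are hypothetical stand-ins for the Electron-internal `splitPath()` and archive binding.

```javascript
// `resolve` returns undefined for "not an archive path", null for "entry
// missing", or a real on-disk path to use instead (hypothetical contract).
function wrapPathArgument(target, name, resolve, pathArgumentIndex = 0) {
  const original = target[name]
  target[name] = function (...args) {
    const requested = args[pathArgumentIndex]
    const redirected = resolve(requested)
    if (redirected === undefined) return original.apply(this, args) // pass through
    if (redirected === null) {
      const err = new Error(`ENOENT, ${requested} not found`)
      err.code = 'ENOENT'
      throw err
    }
    args[pathArgumentIndex] = redirected // swap in the extracted copy
    return original.apply(this, args)
  }
}

// Usage with a fake module object, so nothing touches the real fs.
const fakeFs = { openSync: (p, flags) => `opened ${p} (${flags})` }
const extracted = { '/app.asar/addon.node': '/tmp/addon-copy.node' }
wrapPathArgument(fakeFs, 'openSync', (p) =>
  p.startsWith('/app.asar/') ? (extracted[p] ?? null) : undefined
)
console.log(fakeFs.openSync('/app.asar/addon.node', 'r'))
// opened /tmp/addon-copy.node (r)
```

The async variants in the loader follow the same shape but report errors through `nextTick(callback, [error])` or `Promise.reject()` instead of throwing.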
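The end of `wrapFsWithAsar()` patches `child_process` lazily by wrapping `Module._load` and marking the module with a hidden `asar-ready` flag so it is only patched once. The sketch below reproduces that idea in plain Node.js. `Module._load` is an undocumented internal, and the `WeakSet` is an assumption standing in for Electron's `v8Util` hidden values, which are not available outside Electron; `patchOnFirstLoad` is a hypothetical name.

```javascript
const Module = require('module')

// Patch a module the first time it is required, and only once.
function patchOnFirstLoad(targetId, patch) {
  const patched = new WeakSet()
  const originalLoad = Module._load
  Module._load = function (request, ...rest) {
    const loaded = originalLoad.call(this, request, ...rest)
    if (request === targetId && !patched.has(loaded)) {
      patched.add(loaded)
      patch(loaded)
    }
    return loaded
  }
  // Returns an undo function that restores the previous loader.
  return () => {
    Module._load = originalLoad
  }
}

// Usage: the callback runs on the first require('os') only.
const undo = patchOnFirstLoad('os', () => console.log('patching os'))
require('os')
require('os')
undo()
```

Both this sketch and the original compare the request string exactly, so a `require('node:child_process')` would not match the `'child_process'` check.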

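Tip records:

| Participant | Message | Time |
| --- | --- | --- |
| zgFree | Thanks for the detailed analysis, I learned a lot! | 2024-10-24 10:33 |
| nig | Looking forward to more high-quality posts; the forum is better with you in it! | 2024-10-21 08:58 |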
